Accelerate AI Model Training

The AceleMax® POD with the WEKA® Data Platform is a rack-scale solution optimized for modern AI workloads. By combining AMAX’s advanced compute and networking technologies with WEKA’s high-performance storage platform, this solution eliminates data bottlenecks, ensures high GPU utilization, and significantly reduces idle time.

With a shared-memory architecture and up to 4,512GB of HBM3e GPU memory per rack, the AceleMax® POD accelerates the training of LLMs and Generative AI models.

Key Features of AceleMax® POD with WEKA® Data Platform

- Unified Solution: Turnkey compute, data storage, processing, and analysis for AI and HPC.

- Low-Latency Data Access: Direct GPU-to-storage paths enhance speed and efficiency.

- Scalable Compute Power: 8x NVIDIA HGX H200 GPU systems powered by 5th Gen Intel® Xeon® Scalable processors.

- Advanced Networking: NVIDIA Mellanox QM9700 switches provide up to 400Gbps InfiniBand connectivity.

- Flexible Storage: Integrated with the WEKA® Data Platform for high-performance storage and data management.

POD Specifications

AceleMax® POD with NVIDIA HGX™ H200

| Component | Specification |
|---|---|
| Networking | 4x NVIDIA Mellanox QM9700 switches |
| Storage and Data Management | WEKA® Data Platform |
| Compute Nodes | 8x NVIDIA HGX H200 GPU Systems |
| Compute Fabric | NVIDIA Quantum QM9700 NDR 400Gbps InfiniBand |
| Software | NVIDIA AI Enterprise |
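As a rough cross-check of the per-rack memory figure quoted earlier, the sketch below works through the arithmetic. It assumes 141GB of HBM3e per H200 GPU and eight GPUs per HGX H200 system; neither value is stated in this document, and the resulting systems-per-rack count is an inference from the arithmetic, not a published specification.

```python
# Assumed values (not stated in this document).
HBM3E_PER_GPU_GB = 141     # assumed HBM3e capacity of one H200 GPU
GPUS_PER_SYSTEM = 8        # assumed 8-GPU HGX H200 configuration

# Values taken from this document.
SYSTEMS_PER_POD = 8        # "8x NVIDIA HGX H200 GPU Systems"
QUOTED_PER_RACK_GB = 4512  # "up to 4,512GB of HBM3e GPU memory per rack"


def pod_memory_gb() -> int:
    """Total GPU memory across every system in the POD, under the assumptions above."""
    return HBM3E_PER_GPU_GB * GPUS_PER_SYSTEM * SYSTEMS_PER_POD


def gpus_for_memory(total_gb: int) -> int:
    """Number of GPUs that add up to total_gb; raises if it is not a whole number."""
    gpus, remainder = divmod(total_gb, HBM3E_PER_GPU_GB)
    if remainder:
        raise ValueError(f"{total_gb}GB is not a multiple of {HBM3E_PER_GPU_GB}GB")
    return gpus


if __name__ == "__main__":
    gpus_per_rack = gpus_for_memory(QUOTED_PER_RACK_GB)
    print(f"GPUs implied per rack:    {gpus_per_rack}")
    print(f"Systems implied per rack: {gpus_per_rack // GPUS_PER_SYSTEM}")
    print(f"Total POD memory (GB):    {pod_memory_gb()}")
```

Under these assumptions, 4,512GB corresponds to 32 GPUs, or four 8-GPU systems per rack, and the full eight-system POD would total 9,024GB.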

Designed for Modern GPU Demands

AI development often runs into GPU shortages and inefficient data systems, which can leave GPUs idle for up to 50% of the time. The AceleMax® POD, integrated with the WEKA® Data Platform, addresses these issues by providing low-latency data access and improving GPU utilization.

By eliminating data stalls, the AceleMax® POD:

- Maximizes Productivity: Keeps GPUs consistently fed with necessary data.

- Optimizes ROI: Reduces idle time and accelerates development cycles.

- Improves Efficiency: Enables faster model training and deployment, empowering enterprises to extract greater value from their hardware investment.
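To make the idle-time argument concrete, the sketch below estimates how wall-clock training time changes with the fraction of time GPUs spend waiting on data. It is a deliberately simple model that assumes the pure compute time is fixed and idle time is spread evenly across the job. The 100-hour job and the 10% idle target are hypothetical values chosen for illustration, not measured results for this solution.

```python
def wall_clock_hours(compute_hours: float, idle_fraction: float) -> float:
    """Elapsed time for a job needing compute_hours of GPU work
    when GPUs sit idle for idle_fraction of the elapsed time."""
    if not 0.0 <= idle_fraction < 1.0:
        raise ValueError("idle_fraction must be in [0, 1)")
    return compute_hours / (1.0 - idle_fraction)


def speedup(idle_before: float, idle_after: float) -> float:
    """Ratio of elapsed time before and after reducing the idle fraction."""
    return wall_clock_hours(1.0, idle_before) / wall_clock_hours(1.0, idle_after)


if __name__ == "__main__":
    compute_hours = 100.0  # hypothetical job size
    before = wall_clock_hours(compute_hours, 0.50)  # 50% idle, as cited above
    after = wall_clock_hours(compute_hours, 0.10)   # hypothetical 10% idle target
    print(f"Elapsed at 50% idle: {before:.1f} h")
    print(f"Elapsed at 10% idle: {after:.1f} h")
    print(f"Speedup: {speedup(0.50, 0.10):.2f}x")
```

In this model, a job with GPUs idle half the time takes twice its pure compute time, so cutting idle time from 50% to a hypothetical 10% would shorten the same job by a factor of about 1.8.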

AMAX ServMax® A-1112G with WEKA® Data Platform

ServMax® A-1112G

- 1 x AMD EPYC 9004/9005 series processor support

- 24 x DDR5 4800 MHz DIMM support

- 2 x M.2 socket support

- 3 x PCIe + 1 x OCP expansion in 1U rack server

- Internal 4-bay cage for mid-bay SKU

- Up to 2 single-slot GPUs

Compact and Scalable Design: Featuring a dense 1U form factor with 12 front hot-swap bays and an optional 4-bay internal cage, AMAX’s ServMax® A-1112G maximizes storage capacity in a compact, space-efficient design suited for high-performance environments.

Industry-Leading Performance: Powered by the WEKA Data Platform and its high-performance WekaFS file system, the ServMax® A-1112G is engineered for today’s data-intensive applications in AI and technical computing. This solution delivers:

- Over 10x the performance of blade-based, all-flash, scale-out NAS.

- 3x the performance of locally attached NVMe SSDs.

These capabilities make it an excellent choice for organizations looking to accelerate demanding AI workloads.

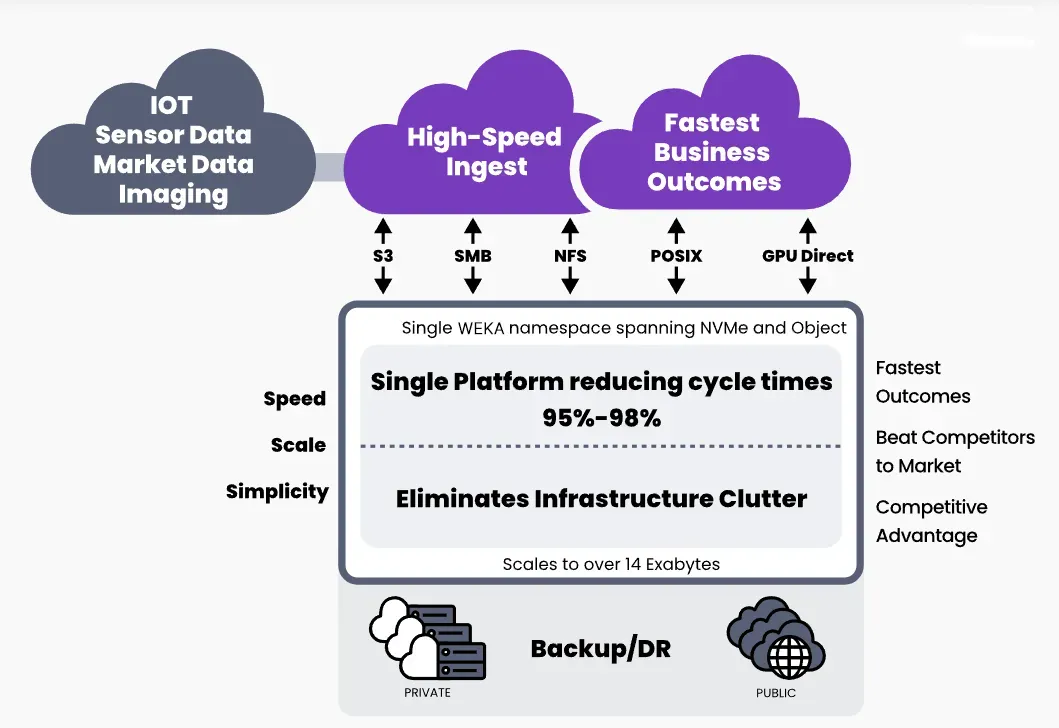

The WEKA Data Platform Difference

The WEKA® Data Platform is a high-performance software solution for data storage, processing, and analysis. Its pipeline-oriented architecture and parallel processing capabilities:

- Accelerate AI model training and inference.

- Enable predictive analytics and real-time data processing.

- Streamline data management for enterprise-scale workloads.

With the ability to handle high-volume, small-file I/O operations typical of AI, WEKA empowers organizations to drive informed decision-making and extract actionable insights from their data assets.
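One way to see how a storage mount copes with the small-file pattern described above is to time concurrent reads of many small files. The sketch below uses only the Python standard library and does not call any WEKA API. The helper names, file count, file size, and worker count are hypothetical choices for illustration. To test a real mount, point `make_sample_files` at a directory on that filesystem instead of a temporary directory, and note that the operating system's page cache can inflate results for freshly written files.

```python
import os
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def read_file(path: Path) -> int:
    """Read one file fully and return its size in bytes."""
    with open(path, "rb") as handle:
        return len(handle.read())


def read_small_files(paths: list[Path], workers: int = 16) -> tuple[int, float]:
    """Read all files concurrently; return (total_bytes, files_per_second)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        total_bytes = sum(pool.map(read_file, paths))
    elapsed = time.perf_counter() - start
    rate = len(paths) / elapsed if elapsed > 0 else float("inf")
    return total_bytes, rate


def make_sample_files(directory: Path, count: int, size_bytes: int) -> list[Path]:
    """Write count files of size_bytes random bytes each and return their paths."""
    paths = []
    for index in range(count):
        path = directory / f"sample_{index:05d}.bin"
        path.write_bytes(os.urandom(size_bytes))
        paths.append(path)
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical workload: 1,000 files of 4 KiB each.
        files = make_sample_files(Path(tmp), count=1000, size_bytes=4096)
        total, rate = read_small_files(files)
        print(f"Read {total} bytes at {rate:.0f} files/s")
```

Threads are used here because file reads release the interpreter lock while waiting on I/O; a training pipeline would more likely rely on its framework's own data loader.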

AMAX Engineered Solutions

The AMAX and WEKA solution delivers a powerful and efficient POD architecture designed for the needs of AI-driven enterprises and startups. By integrating advanced GPU clusters with a high-performance data platform, this solution tackles the core challenges of AI workloads. It empowers organizations to enhance performance, streamline operations, and scale effectively, enabling them to remain competitive in rapidly changing markets.