How Liquid Cooling is Reshaping Enterprise AI Data Centers

AMAX recently took the stage at the AI Expo in Taiwan to discuss a critical topic shaping the future of enterprise compute: cooling strategies for high-density AI infrastructure. As AI models continue to grow in complexity, the demands placed on data center power and thermal design have reached levels that traditional air cooling systems cannot support.

Thinking about transitioning to liquid cooling? Let AMAX help you design and deploy a custom solution built for high-performance workloads.

Our presentation examined why liquid cooling is becoming a necessary evolution for AI data centers, what design and operational changes this shift requires, and how teams can begin deploying it in both new and existing environments.

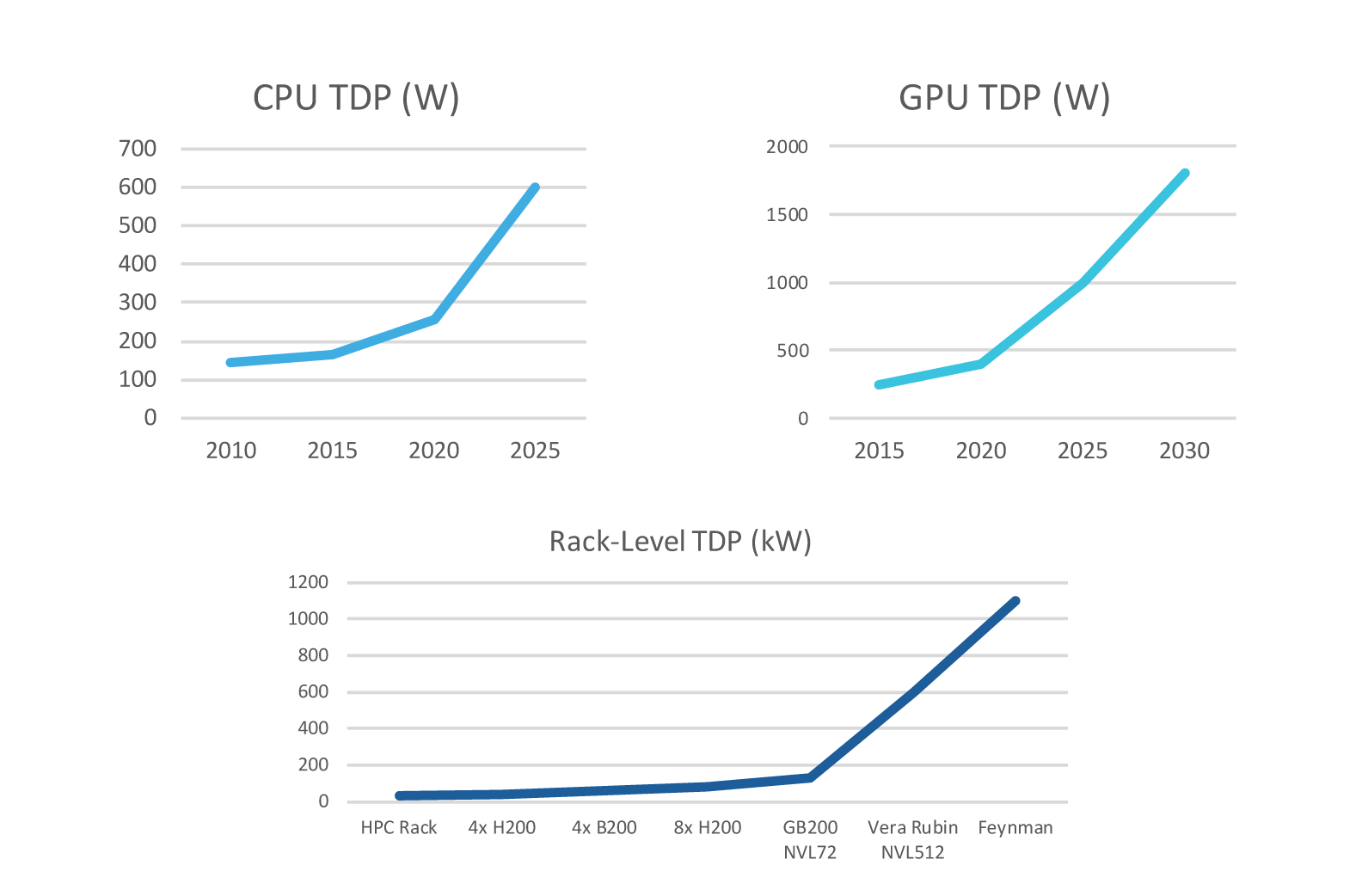

Rising Demands of AI Compute

Over the last several years, advancements in AI have pushed infrastructure requirements far beyond what was typical a decade ago. Large Language Models (LLMs) now span hundreds of billions to trillions of parameters, driving a significant rise in GPU density and overall rack power. It's no longer uncommon to see deployments requiring 50 kW per rack or more. Thermal management has moved from a background concern to a primary constraint.

Conventional air-cooled systems were not built to manage this type of heat. As rack power grows, so does the urgency to adopt new cooling approaches that can maintain performance without expanding the physical footprint or overwhelming existing power budgets.

Liquid Cooling as a Strategic Solution

The average data center cooling system consumes about 40% of the center’s total power. - DataSpan

Liquid cooling uses high-efficiency fluids to transfer heat away from components more effectively than air. This allows data centers to increase compute density without sacrificing reliability or efficiency.

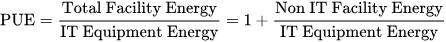

- Air Cooling: PUE 1.5–2.0, with only 50–70% of power used for compute

- Liquid Cooling: PUE 1.1–1.2, with up to 90% of power used for compute

In practice, organizations moving to liquid cooling often see Power Usage Effectiveness (PUE), the ratio of total facility power to IT equipment power, improve significantly, in many cases dropping to 1.1 or 1.2. At those values, well over 80% of facility power goes to IT equipment rather than to cooling and other overhead. Reducing the burden on HVAC systems also helps manage long-term costs, particularly in regions where power availability is limited or prices are high.
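The percentages quoted with each PUE range follow from the definition: if PUE is total facility power divided by IT power, the share reaching IT equipment is 1 / PUE. The sketch below is illustrative only. The function name `compute_share` is ours, and it treats all IT power as "compute", which is a simplification.

```python
def compute_share(pue: float) -> float:
    """Return the fraction of total facility power that reaches IT equipment.

    Assumes PUE = total facility power / IT equipment power, so the
    share is simply its reciprocal.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 / pue


# PUE endpoints taken from the air and liquid cooling ranges above.
for label, pue in [
    ("air, upper", 2.0),
    ("air, lower", 1.5),
    ("liquid, upper", 1.2),
    ("liquid, lower", 1.1),
]:
    print(f"{label:>13}: PUE {pue:.1f} -> {compute_share(pue):.0%} of power to IT")
```

The reciprocal gives 50% to about 67% for air cooling and about 83% to 91% for liquid cooling, which matches the rounded figures in the comparison above.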

Types of Liquid Cooling

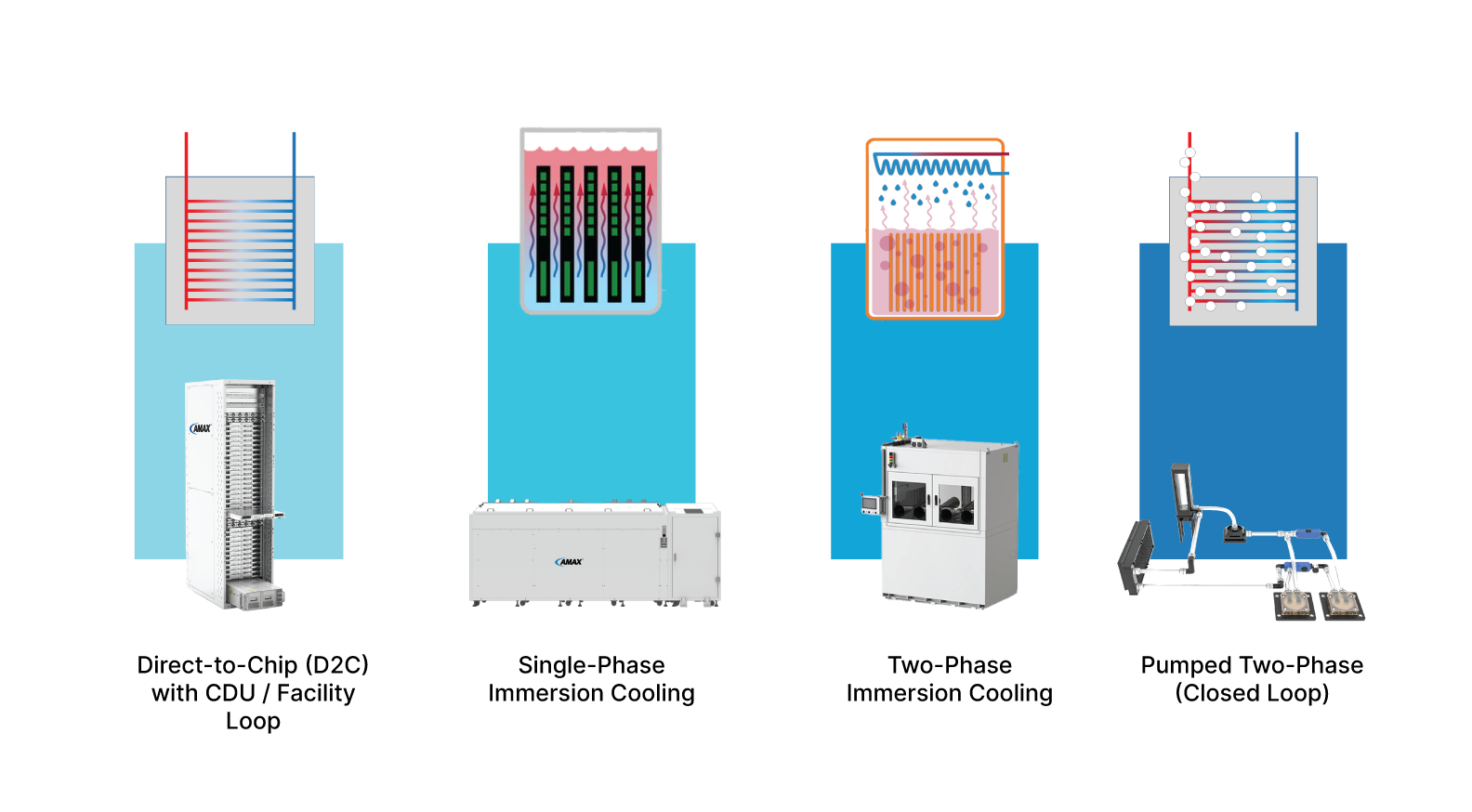

There are several liquid cooling architectures in use today, each suited to different deployment needs and infrastructure readiness levels:

- Direct-to-Chip (D2C) Cooling: This method uses cold plates attached directly to processors and GPUs. Warm liquid circulates through the plates to remove heat efficiently. D2C is often deployed with a cooling distribution unit (CDU) and a facility cooling loop. It is one of the most mature and widely adopted approaches in enterprise settings.

- Single-Phase Immersion Cooling: Servers are fully submerged in a thermally conductive, non-electrically conductive fluid. Heat is transferred from the components to the fluid, which is then cooled through a heat exchanger. This method provides excellent heat removal but may require rethinking serviceability and system design.

- Two-Phase Immersion Cooling: Similar to single-phase, but the fluid is chosen to boil at a relatively low temperature, so it changes phase at the component surface and absorbs more heat in the process. The vapor then condenses on a cooled surface, releasing the heat and returning to liquid form. This method offers very high thermal efficiency but comes with increased system complexity.

- Pumped Two-Phase (Closed Loop) Cooling: A sealed loop system that circulates a refrigerant through components. The fluid changes phase inside the system, enabling high-efficiency heat transfer without immersion. This architecture combines compact form factors with strong thermal performance, though it typically requires specialized components.

Each approach has different benefits and limitations depending on density targets, space constraints, and infrastructure maturity.

Power Availability is Becoming a Critical Limitation

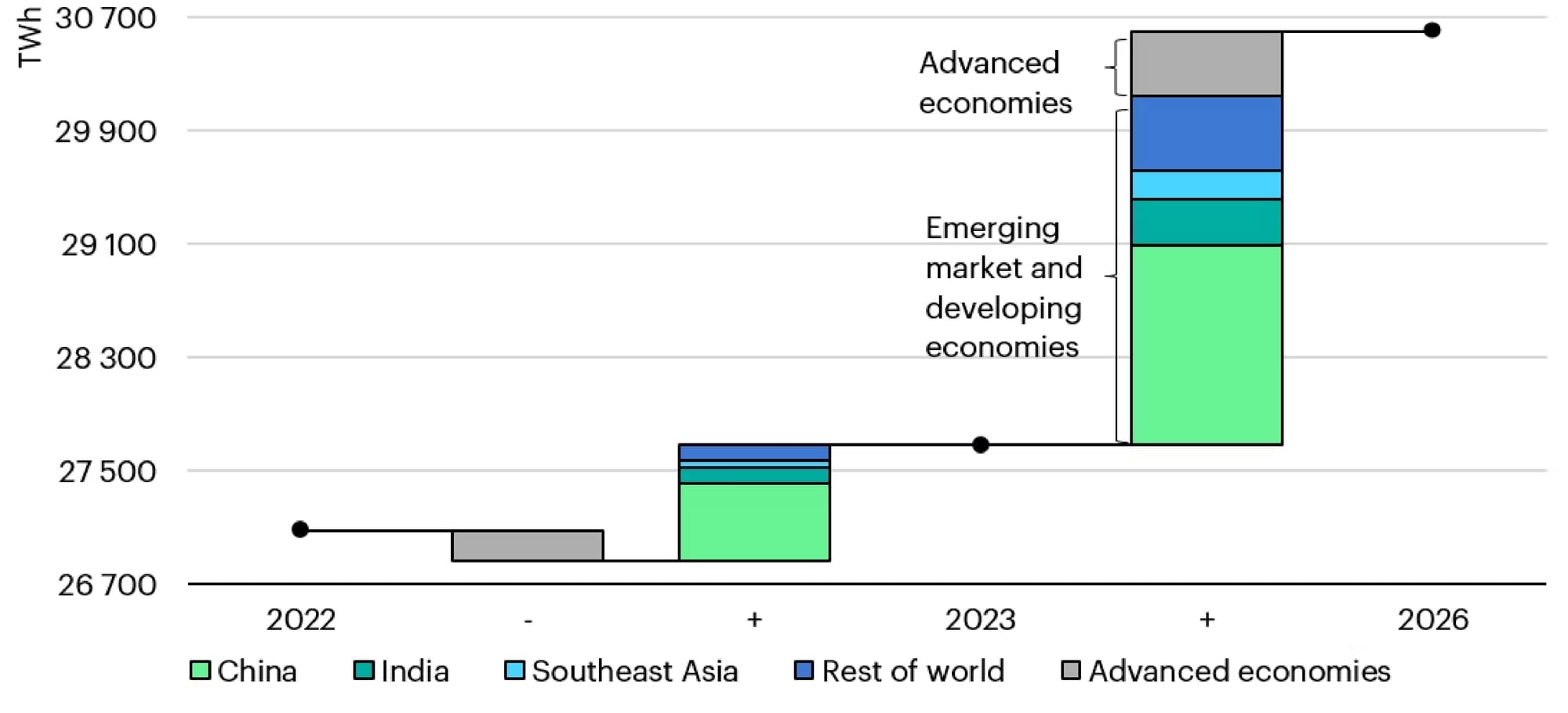

Demand for data center energy continues to climb. Projections show global data center electricity consumption reaching 1,000 TWh by 2026, a level comparable to the total electricity consumption of Japan. In Ireland, data centers already account for more than 20% of national electricity consumption.¹

This level of usage introduces new constraints. Many organizations are now facing power caps or limited access to new utility infrastructure. Improving thermal efficiency is no longer about optimization; it is a requirement for growth. Liquid cooling reduces facility overhead and creates headroom for expanding compute capacity without requiring additional grid allocation.
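To make the headroom argument concrete, the sketch below estimates how much IT load fits under a fixed grid allocation at two PUE values. The 10 MW cap and the specific PUE values (1.6 and 1.15) are hypothetical inputs chosen from within the ranges discussed earlier, not measurements from any deployment.

```python
def it_capacity_mw(grid_allocation_mw: float, pue: float) -> float:
    """IT load (MW) supportable under a fixed facility power cap.

    Assumes PUE = total facility power / IT power, so IT power = cap / PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return grid_allocation_mw / pue


cap_mw = 10.0          # hypothetical utility allocation
air_pue = 1.6          # hypothetical air-cooled facility
liquid_pue = 1.15      # hypothetical liquid-cooled facility

air_it = it_capacity_mw(cap_mw, air_pue)
liquid_it = it_capacity_mw(cap_mw, liquid_pue)
gain = liquid_it - air_it

print(f"Air-cooled IT capacity:    {air_it:.2f} MW")
print(f"Liquid-cooled IT capacity: {liquid_it:.2f} MW")
print(f"Additional compute headroom: {gain:.2f} MW ({gain / air_it:.0%})")
```

Under these assumptions, the same grid allocation supports roughly 39% more IT load, with no change to the utility contract.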

Planning and Operational Considerations

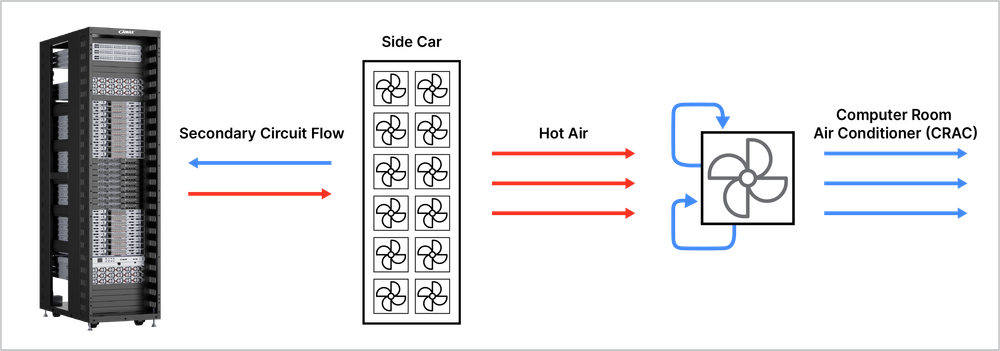

Deploying liquid cooling introduces a different set of engineering and operational requirements compared to air-cooled systems. Most current systems still use hybrid configurations, where liquid manages most of the heat load and air provides supplemental cooling. This means designing around dual thermal domains and ensuring compatibility at both the rack and facility level.

Maintenance expectations shift as well. Liquid cooling systems depend on clean fluid, stable pump performance, and leak prevention. This calls for preventive maintenance backed by strong monitoring, rather than the break-fix model common with air cooling. Downtime from preventable failures becomes a greater risk when operating within tighter thermal margins.

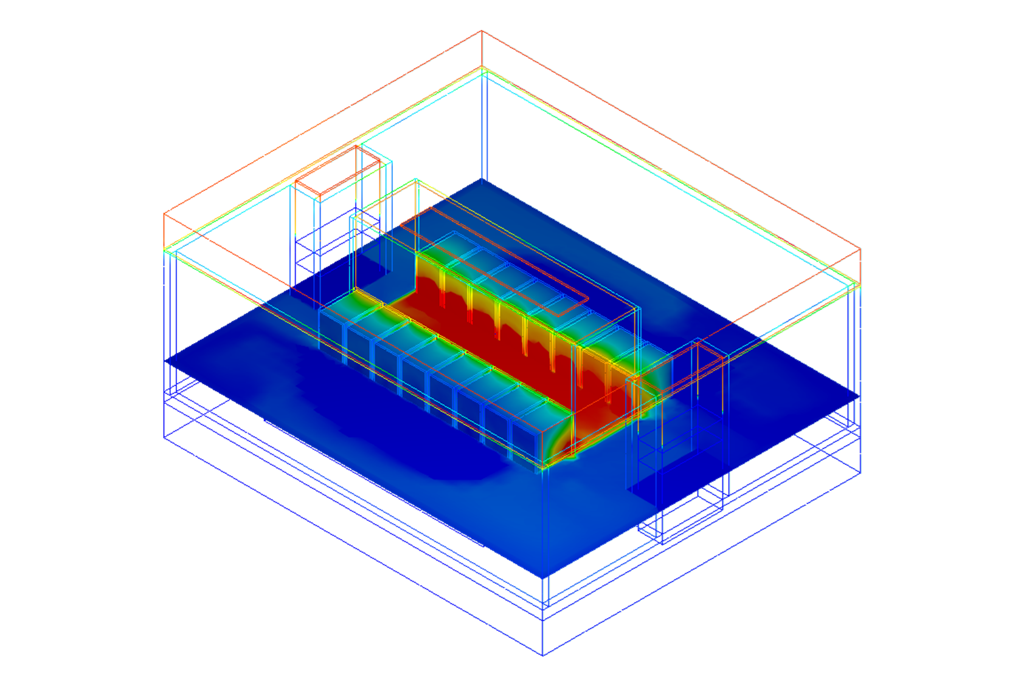

Custom engineering is often necessary to align cooling infrastructure with specific workloads and hardware. Variables such as flow rate, coolant pressure, and thermal load must be precisely modeled. Compatibility with existing data center infrastructure should be addressed early to avoid costly retrofits.
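As a first-order illustration of how flow rate and thermal load relate, the sketch below applies the steady-state heat balance Q = ṁ · c_p · ΔT to estimate the coolant flow a rack needs. It assumes plain water properties near room temperature, where real loops often use a glycol mix with lower heat capacity, and a hypothetical `liquid_fraction` for hybrid racks where air removes the rest of the heat. It is a sizing sketch, not a substitute for detailed thermal modeling.

```python
WATER_CP_J_PER_KG_K = 4186.0      # approximate specific heat of water
WATER_DENSITY_KG_PER_M3 = 997.0   # approximate density of water near 25 °C


def required_flow_lpm(
    heat_load_w: float,
    delta_t_k: float,
    liquid_fraction: float = 1.0,
    cp: float = WATER_CP_J_PER_KG_K,
    density: float = WATER_DENSITY_KG_PER_M3,
) -> float:
    """Coolant flow (litres per minute) needed to carry away a heat load.

    heat_load_w      total rack heat in watts
    delta_t_k        coolant temperature rise across the rack, in kelvin
    liquid_fraction  share of heat captured by liquid (hypothetical knob
                     for hybrid liquid/air racks)
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    if not 0.0 < liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be in (0, 1]")
    liquid_heat_w = heat_load_w * liquid_fraction
    mass_flow_kg_s = liquid_heat_w / (cp * delta_t_k)   # from Q = m_dot * cp * dT
    volume_flow_m3_s = mass_flow_kg_s / density
    return volume_flow_m3_s * 1000.0 * 60.0             # m^3/s -> L/min


# A 50 kW rack with a 10 K coolant temperature rise.
full = required_flow_lpm(50_000, 10.0)
hybrid = required_flow_lpm(50_000, 10.0, liquid_fraction=0.8)
print(f"All heat to liquid: {full:.1f} L/min")
print(f"80% to liquid:      {hybrid:.1f} L/min")
```

For these inputs the estimate is roughly 72 L/min when liquid carries the full load. Halving the allowed temperature rise doubles the required flow, which is why ΔT targets, pump capacity, and pressure drop need to be considered together.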

Standardization is still developing across the industry. Components like quick-disconnects, manifolds, and CDU interfaces often vary by vendor, which can make integration more complex. Partner selection becomes an important part of any successful deployment.

Pathways to Adoption

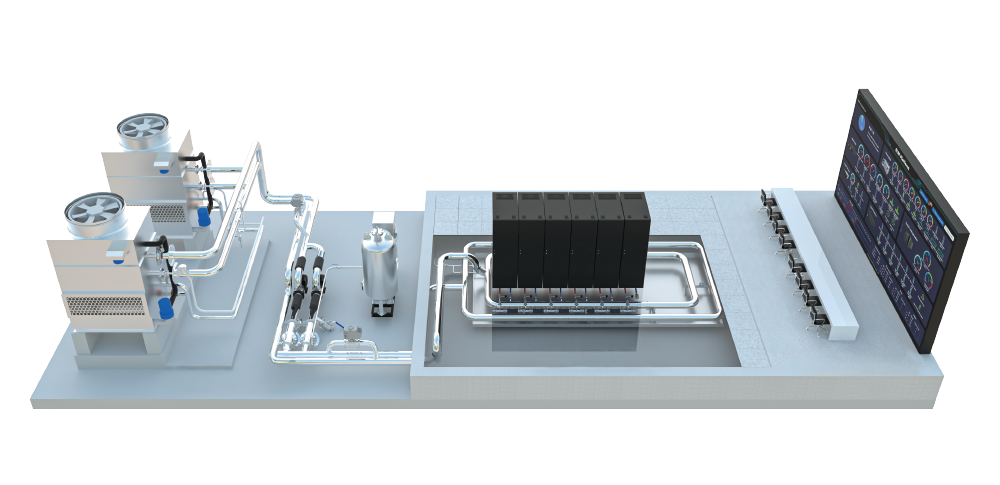

Organizations interested in liquid cooling do not need to commit to a full facility overhaul to get started. AMAX offers rack-level systems that include side-mounted radiator units (sidecars), allowing them to operate independently within air-cooled environments. These are well suited for proof-of-concept deployments or early testing.

For larger-scale needs, our Compact Data Center Solution supports direct-to-chip liquid cooling with integrated power and monitoring. It is engineered for up to 0.5 MW of high-density compute and can be deployed quickly in a wide range of environments.

These solutions are designed to help teams evaluate and adopt liquid cooling in practical steps, with the ability to expand as demand grows.

Preparing for What Comes Next

Cooling infrastructure must evolve alongside AI compute. Liquid cooling enables the energy efficiency, density, and reliability that high-performance AI workloads require.

AMAX brings years of experience designing and deploying liquid-cooled systems for customers building advanced AI and HPC environments. We support everything from early thermal modeling to final deployment, helping our customers make informed decisions and achieve performance goals without compromising power or operational limits.

Speak with our team of AI experts about your upcoming deployment today.