The Next Generation of GPUs

With the rapid pace of AI development, staying up to date with the latest GPU hardware is crucial for any enterprise looking to deploy AI applications. MLCommons recently published its MLPerf Inference v4.1 results, which cover the NVIDIA B200 and H200 Tensor Core GPUs as well as the AMD Instinct MI300X accelerator. These GPUs are built specifically for AI workloads, giving businesses several ways to match hardware to their needs and scale effectively.

What Are MLPerf and Inference v4.1?

MLPerf™ benchmarks, developed by MLCommons (a consortium of AI experts from academia, research, and industry), are designed to provide unbiased evaluations of training and inference performance across hardware, software, and services. Every submission must follow the same strict run rules, so results can be compared fairly across vendors.

The MLPerf Inference v4.1 benchmark suite measures performance across nine tests spanning image classification, text-to-image generation, object detection, medical imaging, natural language processing, large language models (LLMs), and recommendation systems.

NVIDIA H200 GPU AI Benchmarks

The NVIDIA H200 Tensor Core GPU delivered strong results across the suite, including on the newly introduced Mixtral 8x7B LLM benchmark.

Mixtral 8x7B is a mixture-of-experts (MoE) model. Instead of running every parameter on every input, each MoE layer uses a small router network to send each token to only a subset of its expert sub-networks, improving efficiency without shrinking the model's total capacity.
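To make the routing idea concrete, here is a toy top-2 router in Python. It is a simplified sketch, not Mixtral's implementation: the "experts" are hypothetical stand-ins that just scale their input, the router weights are made up, and a real MoE model routes every token at every layer to feed-forward experts. It does show the core mechanism: score all experts, keep the two best, renormalize their weights, and run only those two.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def top_k_route(logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Return (expert index, weight) for the k highest router logits,
    softmax-normalized over just those k experts."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    z = sum(exps)
    return [(i, e / z) for i, e in zip(top, exps)]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert],
                k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token vector through its top-k experts and mix their outputs."""
    # Router logit for expert i is the dot product of router row i with x.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    used = []
    for idx, weight in top_k_route(logits, k):
        y = experts[idx](x)  # only the selected experts are evaluated
        used.append(idx)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, used


# Hypothetical toy setup: 8 "experts" that scale their input by 1..8,
# and a router whose logit for expert i is simply i for this input.
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
router = [[float(i), 0.0] for i in range(8)]
out, used = moe_forward([1.0, 0.5], router, experts)
print(used)  # [7, 6]
```

Only 2 of the 8 expert functions are called for this token; the other 6 cost nothing, which is the source of the efficiency described above.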

The name suggests 8 experts of 7 billion parameters each (56 billion in total), but only the feed-forward layers are replicated per expert; the attention and embedding layers are shared. That is why the model has 46.7 billion parameters in total. Because the router selects 2 of the 8 experts for each token, only about 12.9 billion of those parameters are active per token.
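The 46.7B and 12.9B figures can be reproduced with a back-of-the-envelope count. This sketch assumes the architecture values from the publicly released Mixtral-8x7B-v0.1 configuration (hidden size 4096, expert feed-forward size 14336, 32 layers, 32 query heads with 8 key/value heads, a 32,000-token vocabulary, an untied output head, 8 experts with 2 active per token) and assumes no bias terms; the function name is illustrative.

```python
# Assumed architecture values, taken from the public Mixtral-8x7B-v0.1 config.
HIDDEN = 4096      # model (residual stream) width
FFN = 14336        # inner width of each expert's feed-forward block
LAYERS = 32
HEADS = 32         # query heads
KV_HEADS = 8       # key/value heads (grouped-query attention)
VOCAB = 32000
EXPERTS = 8
TOP_K = 2          # experts active per token

HEAD_DIM = HIDDEN // HEADS  # 128


def mixtral_param_counts():
    """Return (total, active-per-token) parameter counts, ignoring biases."""
    expert = 3 * HIDDEN * FFN  # SwiGLU expert: gate, up, and down projections
    # q and o projections are HIDDEN x HIDDEN; k and v are narrower (8 KV heads).
    attention = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_HEADS * HEAD_DIM
    router = HIDDEN * EXPERTS  # one logit per expert
    norms = 2 * HIDDEN         # two RMSNorm weight vectors per layer
    shared_per_layer = attention + router + norms
    # Input embedding + untied output head + final norm, shared by all tokens.
    embeddings = 2 * VOCAB * HIDDEN + HIDDEN
    total = LAYERS * (shared_per_layer + EXPERTS * expert) + embeddings
    active = LAYERS * (shared_per_layer + TOP_K * expert) + embeddings
    return total, active


total, active = mixtral_param_counts()
print(f"total  = {total / 1e9:.1f}B")   # total  = 46.7B
print(f"active = {active / 1e9:.1f}B")  # active = 12.9B
```

The gap between the naive 56 billion and the real 46.7 billion comes entirely from the shared attention and embedding weights, which are counted once rather than eight times.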

MoE models are increasingly popular for LLM deployments because a single model can handle a broad range of questions and tasks. Their efficiency comes from activating only a few experts for each token, which lets them generate results faster than dense models with a similar total parameter count.

NVIDIA B200 GPU AI Benchmarks

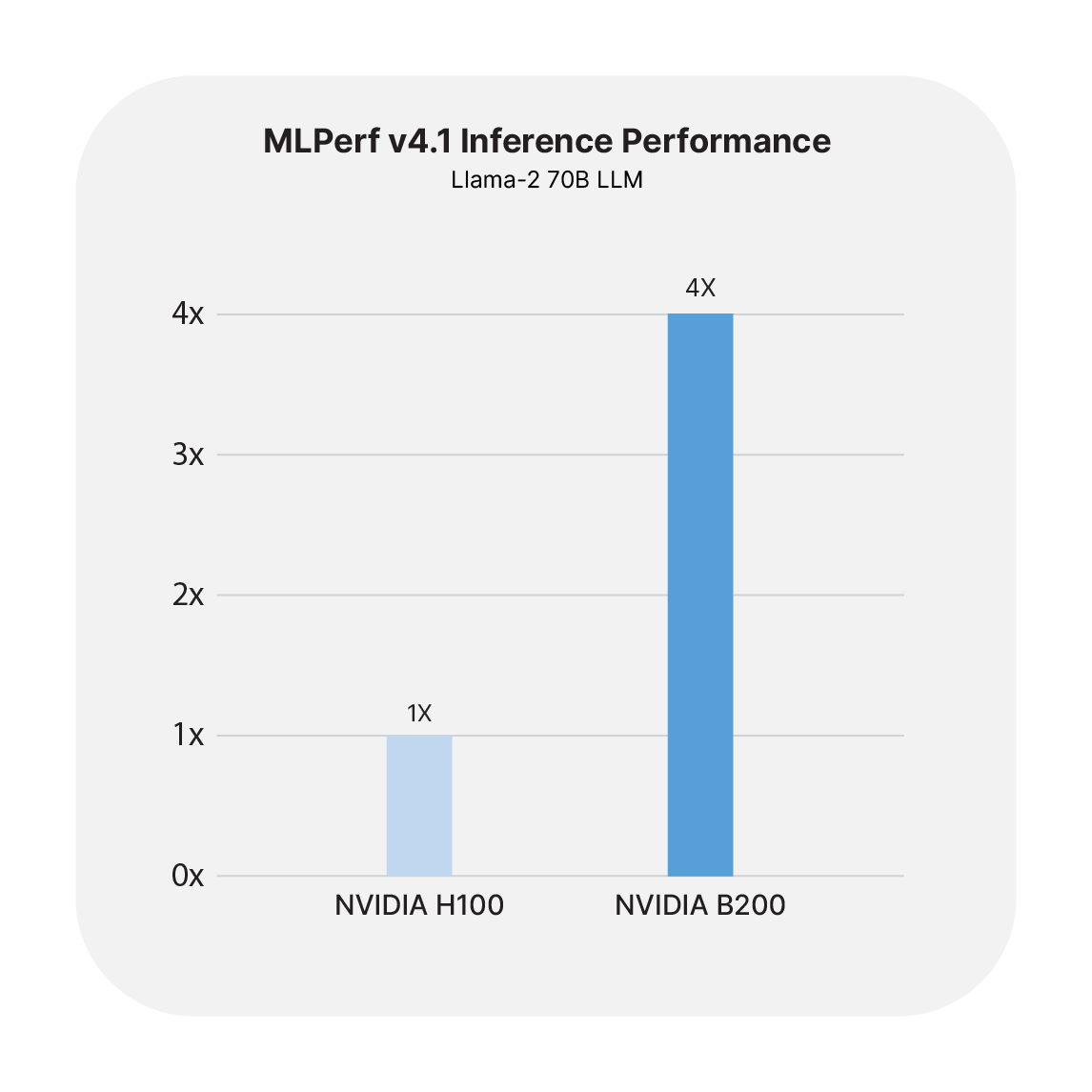

In the latest MLPerf industry benchmarks, Inference v4.1, NVIDIA platforms excelled across all data center tests. Notably, the upcoming NVIDIA B200 made its debut with up to 4x the performance of the NVIDIA H100 Tensor Core GPU on MLPerf’s largest LLM workload, Llama 2 70B.

This gain is likely due to the platform's second-generation Transformer Engine and its FP4 Tensor Cores, which increase inference throughput by computing on 4-bit floating-point values instead of 8- or 16-bit ones.
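For intuition about what FP4 means, the sketch below quantizes a small block of values to a 4-bit E2M1 float (1 sign bit, 2 exponent bits, 1 mantissa bit), whose only non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4, and 6. It is a generic block-scaling sketch under stated assumptions: one float scale per block, and ties resolved toward the smaller magnitude for simplicity. NVIDIA's actual Blackwell FP4 formats and the quantization recipe behind its MLPerf submission are not shown here and differ in block size, scale encoding, and rounding; the helper names are illustrative.

```python
from typing import List, Tuple

# Non-negative magnitudes representable by a 4-bit E2M1 float.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0


def quantize_block(values: List[float]) -> Tuple[float, List[float]]:
    """Scale a block so its largest magnitude maps to 6.0, then round each
    value to the nearest E2M1 level (assumption: ties go to the smaller level)."""
    amax = max(abs(v) for v in values)
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for v in values:
        mag = abs(v) / scale
        # min() returns the first of equally close levels, i.e. the smaller one.
        nearest = min(E2M1_MAGNITUDES, key=lambda q: abs(q - mag))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale: float, codes: List[float]) -> List[float]:
    """Recover approximate original values from the scale and 4-bit codes."""
    return [scale * c for c in codes]


scale, codes = quantize_block([0.12, -0.5, 0.9, 3.0])
print(scale, codes)                    # 0.5 [0.0, -1.0, 2.0, 6.0]
print(dequantize_block(scale, codes))  # [0.0, -0.5, 1.0, 3.0]
```

Storing 4 bits per value instead of 16 cuts weight memory and bandwidth by 4x, which is one reason low-precision formats can raise inference throughput; the cost is the coarse rounding visible above (0.12 becomes 0.0 and 0.9 becomes 1.0).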

AMD Instinct™ MI300X Accelerator AI Benchmarks

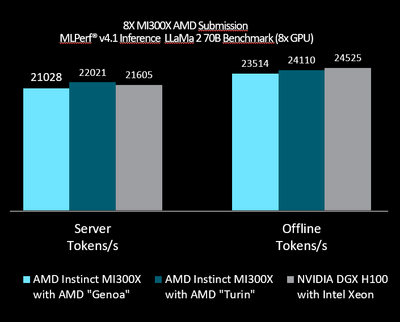

In the MLPerf Inference v4.1 results, AMD's Instinct MI300X GPU performed strongly on the Llama 2 70B benchmark, coming within 2-3% of NVIDIA's DGX H100 systems when paired with AMD's 4th Gen EPYC CPUs. This close result underscores the MI300X's potential to deliver powerful AI performance, especially for businesses that want flexibility in hardware choices and high memory capacity.

A key advantage of the MI300X is its 192 GB of HBM3 memory, which lets it hold a large model such as Llama 2 70B on a single GPU (roughly 140 GB of weights at 16-bit precision) instead of splitting it across several GPUs. Keeping the model on one device avoids inter-GPU communication overhead and improves inference efficiency, making the MI300X well suited for enterprises working with very large AI models.
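A rough sizing sketch shows why 192 GB is enough for Llama 2 70B on one device and how much memory is left for the key/value (KV) cache. It assumes a nominal 70 billion parameters (the exact count is slightly lower), 16-bit weights and KV cache, and the published Llama 2 70B shape of 80 layers with 8 key/value heads of dimension 128. It ignores activations, framework overhead, and quantization, so real headroom will be smaller; the helper names are illustrative.

```python
GB = 1e9  # decimal gigabytes, the unit HBM capacity is usually quoted in

# Assumed Llama 2 70B shape (nominal parameter count).
LLAMA2_70B = {"params": 70e9, "layers": 80, "kv_heads": 8, "head_dim": 128}


def weight_bytes(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the model weights."""
    return num_params * bytes_per_param


def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_elem: float) -> float:
    """Each token caches one K and one V vector per KV head in every layer."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_cached_tokens(capacity_gb: float, bytes_per_param: float = 2) -> int:
    """Tokens of KV cache that fit after the weights are loaded (0 if they don't fit)."""
    m = LLAMA2_70B
    free = capacity_gb * GB - weight_bytes(m["params"], bytes_per_param)
    if free <= 0:
        return 0
    per_token = kv_cache_bytes_per_token(m["layers"], m["kv_heads"],
                                         m["head_dim"], bytes_per_param)
    return int(free // per_token)


print(weight_bytes(70e9, 2) / GB)  # 140.0 GB of 16-bit weights
print(max_cached_tokens(192))      # 158691 tokens of KV cache headroom
print(max_cached_tokens(80))       # 0: an 80 GB device cannot hold the weights
```

Under these assumptions the weights alone exceed an 80 GB device, so such GPUs must split the model, while a 192 GB device keeps it whole with about 52 GB to spare for the KV cache shared by all concurrent requests.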

For businesses seeking an alternative to NVIDIA GPUs, the AMD Instinct MI300X provides a strong combination of performance, scalability, and efficiency. Its ability to handle large models, paired with the cost-effectiveness of AMD EPYC CPUs, makes it a compelling option for enterprises aiming to optimize AI infrastructure.

Choosing the Right AI Solution for Your Business Needs

At AMAX, we understand that successful AI deployment goes beyond raw processing power—it’s about selecting the right solution for your specific needs. The NVIDIA H200 GPU offers versatility and is ready for immediate deployment, making it an ideal choice for businesses looking to scale quickly or meet pressing AI demands.

The NVIDIA B200, while demonstrating superior performance, is still on the horizon with uncertain shipment times. Whether you're prioritizing the immediate, adaptable power of the NVIDIA H200 or anticipating the peak performance of the NVIDIA B200, AMAX is here to guide you in choosing and implementing the best solutions for your business.