AMAX + Intel Better Together

The Future of AI Data Centers

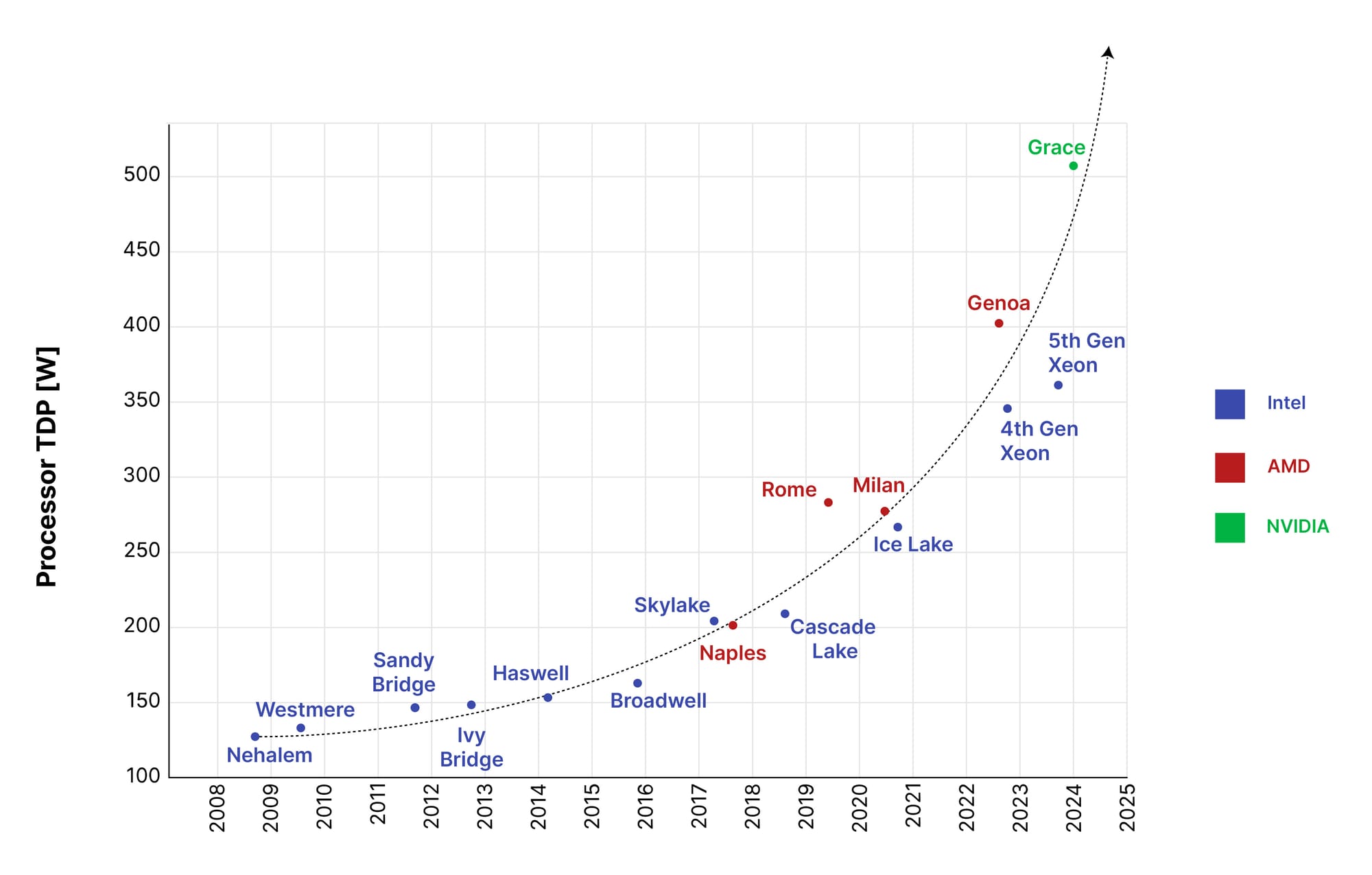

As AI-driven enterprises expand, so does their demand for computing power. The pursuit of higher performance has driven a marked increase in processor TDP (Thermal Design Power). This shift underscores the importance of robust on-premises AI solutions that provide the infrastructure and cooling needed to support the higher thermal and power requirements of cutting-edge chips such as Next Gen Intel® Xeon® Scalable Processors.

Explore the benefits of advanced liquid cooling and see the range of solutions we offer.

The graph below illustrates the rapid increase in processor TDP in recent years. As processors become more advanced, the heat they generate becomes a more significant challenge.

Processor TDP Over Time (2008-2025)

Going On-Prem with AMAX

At AMAX, our AI solutions powered by Intel are custom-designed for your enterprise’s specific AI and deep learning needs, ensuring efficient management and real-time tuning of AI hardware resources. Utilizing Intel® Xeon® AI Accelerators, our on-premises infrastructure reduces data transport costs and network congestion, improving the efficiency of your AI workloads. With security as a priority, our systems process sensitive data within the secure confines of your local network, safeguarding your proprietary information.

Next Gen Intel® Xeon® Scalable Processors

The Intel 5th Gen Xeon Scalable processor is set to transform future data centers with its enhanced performance cores, delivering accelerated computing and a reduced total cost of ownership. Because these high-performance cores and high-bandwidth memory are expected to generate more heat, AMAX’s advanced liquid cooling technology becomes essential, allowing the new processor to sustain higher performance and efficiency. This is particularly valuable for AI workloads, which often involve sustained, high-intensity processing: effective cooling lets the Next Gen Xeon processor outperform previous generations while keeping its thermal output in check.

Advanced Liquid Cooling for AI

The evolution of chipsets toward increasingly powerful processors, such as Intel’s Next Gen Xeon Scalable processor, demands higher wattage, which in turn generates significantly more heat during operation. Managing this increased thermal output is critical to maintaining the performance and longevity of these processors.

AMAX’s advanced liquid cooling offers an innovative solution to the excess heat that modern processors generate. Our thermal engineers excel at customizing systems for Direct-to-Chip (D2C) cooling and immersion cooling, tailoring thermal management to the specific needs of your on-premises AI infrastructure. Advanced cooling not only addresses thermal challenges but also reduces data center energy costs. Pairing AMAX’s custom liquid cooling design with AI-optimized Intel processors establishes the foundation for a high-performance, reliable on-premises AI infrastructure.
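Why more wattage means more cooling work can be made concrete with the standard sensible-heat relation used to size liquid loops, Q = ṁ·c_p·ΔT. The coolant mass flow ṁ needed to carry away Q watts grows in direct proportion to Q. The sketch below is a back-of-the-envelope calculator, not AMAX's sizing method, and it rests on stated assumptions. The coolant is plain water, although many D2C loops use water-glycol mixes with a lower specific heat. The entire processor TDP is treated as heat absorbed by the coolant. The TDP values are hypothetical examples rather than figures for any specific Xeon SKU.

```python
# Sensible-heat relation: Q = m_dot * c_p * delta_T  =>  m_dot = Q / (c_p * delta_T)
# Assumptions (illustrative only): coolant is plain water, and all processor
# power (taken as TDP) ends up in the coolant rather than the surrounding air.

WATER_CP_J_PER_KG_K = 4186.0    # approximate specific heat of water near room temperature
WATER_DENSITY_KG_PER_L = 1.0    # approximate density of water


def required_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Coolant flow (litres per minute) needed to remove heat_w watts
    while the coolant warms by delta_t_k kelvin across the cold plate."""
    if heat_w < 0 or delta_t_k <= 0:
        raise ValueError("heat_w must be non-negative and delta_t_k positive")
    mass_flow_kg_per_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    volume_flow_l_per_s = mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L
    return volume_flow_l_per_s * 60.0


if __name__ == "__main__":
    # Hypothetical per-socket heat loads, not specifications of any particular processor.
    for tdp_w in (150, 250, 350, 500):
        flow = required_flow_l_per_min(tdp_w, delta_t_k=10.0)
        print(f"{tdp_w:>4} W at a 10 K rise -> {flow:.2f} L/min")
```

For example, a 350 W heat load with a 10 K coolant temperature rise works out to 350 / (4186 × 10) ≈ 0.0084 kg/s, or roughly 0.5 L/min. Doubling the heat load doubles the required flow, which is why rising TDP puts direct pressure on cooling design. A real loop design also has to account for pressure drop, pump capacity, and safety margins, which this sketch ignores.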

Getting Started with AI

Your on-premises AI deployment begins with a detailed consultation and assessment by AMAX. Our solution architects dive into your enterprise’s specific needs to facilitate a smooth transition to a custom AI infrastructure, considering space, compute, networking, and any additional requirements. The assessment covers data volume, processing capabilities, and security protocols, shaping the blueprint of your on-premises AI solution.

Enterprises looking for a more tailored AI experience can benefit from implementing Retrieval-Augmented Generation (RAG) on-premises. RAG combines generative AI with embedding-based search: documents and queries are converted into vector embeddings so the system can find the most relevant information in an external dataset. A pre-trained Large Language Model (LLM) then uses the retrieved information to produce precise, tailored responses.

RAG acts as an extension to your LLM, securely fed with your business’s internal documents. When you pose a query specific to your business, products, or services, the RAG system draws on those documents to deliver accurate, relevant responses that a standard pre-trained model cannot offer on its own. With an on-premises RAG solution, your queries and data remain within your local infrastructure and are never sent to the cloud.
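The retrieve-then-generate flow described in this section can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not AMAX's implementation, and it rests on several assumptions. The `embed` function is a hypothetical stand-in that uses normalized word counts instead of a trained embedding model. The sample documents are invented. The final call to a locally hosted LLM is deliberately left out, so `build_prompt` only returns the augmented prompt that would be sent to it. Everything runs in memory, which mirrors the on-premises point: no query or document leaves the machine.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Hypothetical toy embedding: a unit-length bag-of-words vector.
    A real RAG system would use a trained embedding model instead."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {tok: c / norm for tok, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit-length, so their dot product is the cosine similarity.
    return sum(w * b.get(tok, 0.0) for tok, w in a.items())


class LocalIndex:
    """In-memory index of internal documents; nothing leaves the process."""

    def __init__(self, docs: list[str]):
        self.docs = docs
        self.vectors = [embed(d) for d in docs]

    def search(self, query: str, k: int = 2) -> list[str]:
        # Rank every document by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(
            range(len(self.docs)),
            key=lambda i: cosine(q, self.vectors[i]),
            reverse=True,
        )
        return [self.docs[i] for i in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    # Augment the user's question with retrieved passages before it reaches the LLM.
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}\n"
    )


if __name__ == "__main__":
    # Hypothetical internal documents, for illustration only.
    docs = [
        "Our standard server warranty period is three years.",
        "The cafeteria is open from 8am to 3pm.",
        "Liquid cooled racks require a facility water loop.",
    ]
    index = LocalIndex(docs)
    question = "What is the server warranty period?"
    print(build_prompt(question, index.search(question, k=1)))
```

In a production deployment, the toy `embed` function would typically be replaced by an embedding model and the linear scan by a vector index. The shape of the pipeline stays the same: embed, rank by similarity, prepend the retrieved context, then generate.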