What’s the Difference Between NVIDIA HGX H200 and H200 NVL?

NVIDIA’s Hopper architecture underpins two H200 products aimed at different needs in the enterprise and research sectors: the NVIDIA HGX H200 platform and the newer NVIDIA H200 NVL. The HGX H200 offers a high-performance foundation for both liquid- and air-cooled environments, while the H200 NVL is a cost-effective, lower-power option designed for air-cooled enterprise racks.

NVIDIA H200 NVL Overview

The newly introduced NVIDIA H200 NVL offers a different approach, catering to data centers with air-cooled, lower-power rack designs. It features:

- PCIe GPUs connected via NVIDIA NVLink bridges for fast GPU-to-GPU communication, with about 7x the bandwidth of PCIe Gen5 (900 GB/s versus 128 GB/s).

- Optimized for racks under 20 kW, a common configuration in enterprise environments.

- Up to 1.7x faster inference and 1.3x higher HPC performance than the H100 NVL.

- Flexible configurations, allowing deployment with 1, 2, 4, or 8 GPUs, providing enterprises the ability to scale based on workload needs.

- Energy efficiency, with the option to run GPUs at lower power envelopes (as low as 400W), maintaining performance while reducing energy costs.
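
The bandwidth and power figures above can be checked with simple arithmetic. The sketch below uses only numbers quoted in this article (900 GB/s NVLink, 128 GB/s PCIe Gen5, a 400W to 600W GPU power range, and a 20 kW rack). The function names are illustrative, and the power estimate covers GPUs only; CPUs, memory, fans, and networking are deliberately left out, so treat it as a lower bound rather than a sizing tool.

```python
# Figures quoted in this article; all names here are illustrative.
NVLINK_GB_S = 900        # NVLink bridge bandwidth per GPU, GB/s
PCIE_GEN5_GB_S = 128     # PCIe Gen5 bandwidth, GB/s
RACK_BUDGET_KW = 20      # rack power class the H200 NVL targets


def bandwidth_ratio(fast_gb_s: float, slow_gb_s: float) -> float:
    """Return how many times larger fast_gb_s is than slow_gb_s."""
    return fast_gb_s / slow_gb_s


def gpu_power_kw(gpu_count: int, watts_per_gpu: float) -> float:
    """Total GPU power draw in kW (GPUs only, no host overhead)."""
    return gpu_count * watts_per_gpu / 1000


ratio = bandwidth_ratio(NVLINK_GB_S, PCIE_GEN5_GB_S)
print(f"NVLink vs PCIe Gen5: {ratio:.2f}x")

# Compare the low and high ends of the configurable power range for 8 GPUs.
for watts in (400, 600):
    kw = gpu_power_kw(8, watts)
    share = kw / RACK_BUDGET_KW
    print(f"8 GPUs at {watts} W: {kw:.1f} kW ({share:.0%} of a {RACK_BUDGET_KW} kW rack)")
```

The ratio works out to roughly 7.03, which matches the "7x" figure in the list above.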

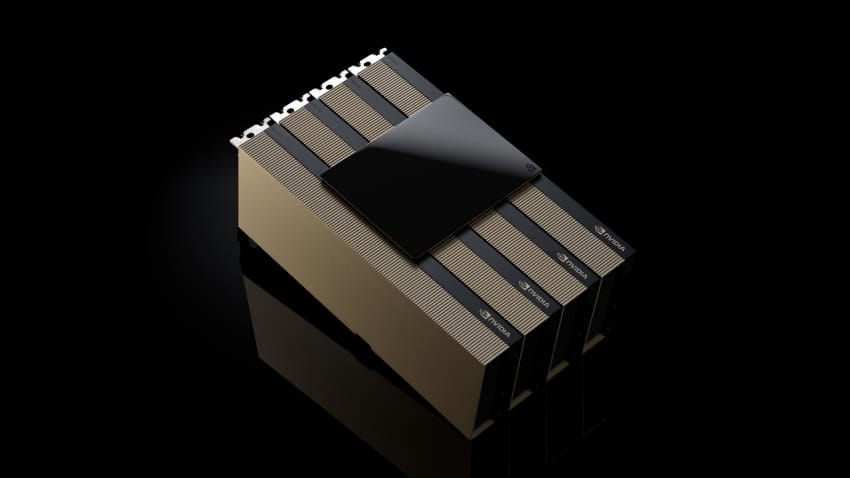

NVIDIA HGX H200 Overview

The NVIDIA HGX H200 has established itself as a leading solution for high-performance AI and HPC environments. It is purpose-built for enterprises and institutions requiring advanced levels of compute and future scalability.

Key features include:

- 141 GB of HBM3e memory and 4.8 TB/s bandwidth for rapid data processing.

- Up to 4 petaFLOPS of FP8 performance per GPU (3,958 TFLOPS), suited to large language models and HPC simulations.

- Scalability options for liquid- and air-cooled configurations, supporting 4- or 8-GPU setups in NVIDIA HGX-certified systems such as the AceleMax® AXG-728IS.

The HGX H200 is currently the go-to solution for many organizations running demanding on-premises workloads such as generative AI model training, scientific simulations, and large-scale inference.

Comparing the NVIDIA HGX H200 and H200 NVL

Key Features

| Feature | NVIDIA HGX H200 | NVIDIA H200 NVL |
|---|---|---|
| Memory | 141 GB HBM3e | 141 GB HBM3e |
| GPU Configuration | 4 or 8 GPUs (SXM-based) | 1-8 GPUs (PCIe-based) |
| Cooling | Liquid or air cooling | Air cooling |
| Performance | Maximum scalability and power | Energy-efficient flexibility |
| Connectivity | NVLink (900 GB/s) | NVLink PCIe bridge (900 GB/s) |
| Form Factor | NVIDIA HGX-certified servers | Standard enterprise racks |
| Power Range | Configurable (up to 700W) | Configurable (400W-600W) |

Specifications

| Feature | H200 SXM | H200 NVL |
|---|---|---|
| FP64 | 34 TFLOPS | 30 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS | 60 TFLOPS |
| FP32 | 67 TFLOPS | 60 TFLOPS |
| TF32 Tensor Core² | 989 TFLOPS | 835 TFLOPS |
| BFLOAT16 Tensor Core² | 1,979 TFLOPS | 1,671 TFLOPS |
| FP16 Tensor Core² | 1,979 TFLOPS | 1,671 TFLOPS |
| FP8 Tensor Core² | 3,958 TFLOPS | 3,341 TFLOPS |
| INT8 Tensor Core² | 3,958 TFLOPS | 3,341 TFLOPS |
| GPU Memory | 141 GB | 141 GB |
| GPU Memory Bandwidth | 4.8 TB/s | 4.8 TB/s |
| Decoders | 7 NVDEC / 7 JPEG | 7 NVDEC / 7 JPEG |
| Confidential Computing | Supported | Supported |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) | Up to 600W (configurable) |
| Multi-Instance GPUs (MIG) | Up to 7 MIGs @ 18 GB each | Up to 7 MIGs @ 16.5 GB each |
| Form Factor | SXM | PCIe (Dual-slot air-cooled) |
| Interconnect | NVIDIA NVLink™: 900 GB/s; PCIe Gen5: 128 GB/s | 2- or 4-way NVIDIA NVLink bridge: 900 GB/s per GPU; PCIe Gen5: 128 GB/s |
| Server Options | NVIDIA HGX™ H200 systems with 4 or 8 GPUs | NVIDIA MGX™ H200 NVL systems with up to 8 GPUs |
| NVIDIA AI Enterprise | Add-on | Included |
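
To put the two columns side by side, the following sketch computes how much of the SXM peak throughput the NVL part reaches, and a rough peak-throughput-per-watt figure at each part's maximum TDP. The numbers are copied from the specifications table. The dictionary layout and function names are illustrative assumptions. These are paper figures at peak ratings, so they should not be read as measured application performance or measured power draw.

```python
# Peak figures copied from the specifications table (TFLOPS) and max TDP (W).
# The structure and names below are illustrative, not an NVIDIA API.
SPECS = {
    "H200 SXM": {"fp64": 34, "fp8_tensor": 3958, "tdp_w": 700},
    "H200 NVL": {"fp64": 30, "fp8_tensor": 3341, "tdp_w": 600},
}


def relative_throughput(metric: str) -> float:
    """NVL peak throughput as a fraction of SXM peak for one metric."""
    return SPECS["H200 NVL"][metric] / SPECS["H200 SXM"][metric]


def peak_per_watt(model: str, metric: str) -> float:
    """Peak TFLOPS divided by max TDP; an upper bound, not a measurement."""
    return SPECS[model][metric] / SPECS[model]["tdp_w"]


for metric in ("fp64", "fp8_tensor"):
    print(f"{metric}: NVL reaches {relative_throughput(metric):.0%} of SXM peak")

for model in SPECS:
    print(f"{model}: {peak_per_watt(model, 'fp8_tensor'):.2f} FP8 TFLOPS per watt")
```

On these figures the H200 NVL reaches roughly 84% to 88% of the SXM peak, and at maximum TDP the two parts land close together per watt. Actual efficiency depends on the workload and on the power envelope each GPU is configured to run at.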

Choosing the Right GPU for Your Needs

When deciding between the HGX H200 and the H200 NVL, consider your data center requirements:

- Choose HGX H200 if your workloads demand top-tier performance, scalability, and flexibility for either liquid- or air-cooled environments.

- Opt for H200 NVL if your organization prioritizes lower power consumption, air cooling, and flexible rack configurations.
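
The criteria above can be written down as a small decision helper. This is a minimal sketch, not an official sizing method: the `Requirements` fields, the `recommend` function, and the order in which the checks run are all assumptions made for illustration. The thresholds themselves (4 or 8 GPUs for HGX systems, 1 to 8 for H200 NVL, the 20 kW rack class, air cooling only for the NVL) come from this article.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical description of a deployment; field names are illustrative."""
    gpus_per_server: int      # GPUs wanted in each server
    rack_power_kw: float      # power budget available per rack
    liquid_cooling: bool      # True if the deployment will be liquid-cooled
    peak_performance: bool    # True if maximum per-GPU throughput is the priority


def recommend(req: Requirements) -> str:
    """Return 'HGX H200' or 'H200 NVL' using the criteria from this article."""
    if req.gpus_per_server not in (1, 2, 4, 8):
        raise ValueError("GPU count must be 1, 2, 4, or 8")
    # HGX H200 systems ship with 4 or 8 GPUs; smaller counts need the NVL.
    if req.gpus_per_server < 4:
        return "H200 NVL"
    # The H200 NVL is an air-cooled part, so liquid cooling implies HGX.
    if req.liquid_cooling:
        return "HGX H200"
    # Racks under 20 kW are the environment the H200 NVL is optimized for.
    if req.rack_power_kw < 20:
        return "H200 NVL"
    return "HGX H200" if req.peak_performance else "H200 NVL"


print(recommend(Requirements(2, 15.0, False, False)))
print(recommend(Requirements(8, 40.0, True, True)))
```

A real selection would also weigh budget, software licensing, and the interconnect topology of the target servers, none of which this sketch models.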

How AMAX Simplifies Your GPU Deployment

AMAX specializes in designing and deploying AI and HPC solutions using NVIDIA GPUs. Whether integrating the HGX H200 for large-scale research or implementing the H200 NVL for enterprise data centers, AMAX offers:

- Custom server design tailored to your workload requirements.

- Deployment and support services to ensure optimal performance from day one.

- Advanced cooling solutions, including liquid and air-cooled configurations.

With AMAX’s expertise, organizations can maximize the potential of NVIDIA Hopper GPUs while optimizing energy efficiency and cost.

Unlock AI and HPC Efficiency with NVIDIA and AMAX

Both the NVIDIA HGX H200 and H200 NVL provide industry-leading capabilities for AI and HPC. The HGX H200 is designed for peak performance and cooling flexibility, while the new H200 NVL offers a versatile option for air-cooled enterprise setups.

AMAX is here to guide your organization in selecting, deploying, and optimizing NVIDIA GPU solutions.