When planning upgrades for your data center, it helps to understand the full range of GPU options, especially as GPU technology keeps evolving to meet the demands of intensive workloads. In this blog, we compare two significant NVIDIA GPUs: the NVIDIA L40S and the NVIDIA A100 Tensor Core GPU. Each brings its own strengths, engineered to meet specific demands of AI, graphics, and high-performance computing. We'll explore their features, ideal use cases, and technical specifications to help you identify which GPU best fits your organizational needs. For those looking to stay ahead of the latest in GPU technology, check out our NVIDIA H100 vs NVIDIA H200 comparison, which covers NVIDIA's newest models and their advanced capabilities.

NVIDIA L40S Overview

The NVIDIA L40S GPU brings notable advances in AI and graphics to the data center, with an emphasis on versatility across workloads. It is designed to address a broad spectrum of data center needs rather than focusing solely on raw power, balancing advanced AI computing with quality graphics and media acceleration. This combination makes it particularly suitable for tasks ranging from generative AI and large language model training and inference to 3D graphics, rendering, and video processing.

Specific Use Cases for the NVIDIA L40S

- Generative AI Applications: The NVIDIA L40S GPU is adept at handling the demands of generative AI, providing the computational power needed for developing new services, insights, and original content.

- Large Language Model (LLM) Training and Inference: For enterprises exploring the expanding world of natural language processing, the NVIDIA L40S offers the necessary resources for both training and deploying large language models.

- 3D Graphics and Rendering: The GPU's capabilities extend to high-fidelity creative workflows, including 3D modeling and rendering, making it a solid choice for tasks in animation studios, architectural visualization, and product design.

- Video Processing: With its media acceleration features, the NVIDIA L40S is well-suited for video processing tasks, catering to the needs of content creation and streaming services.

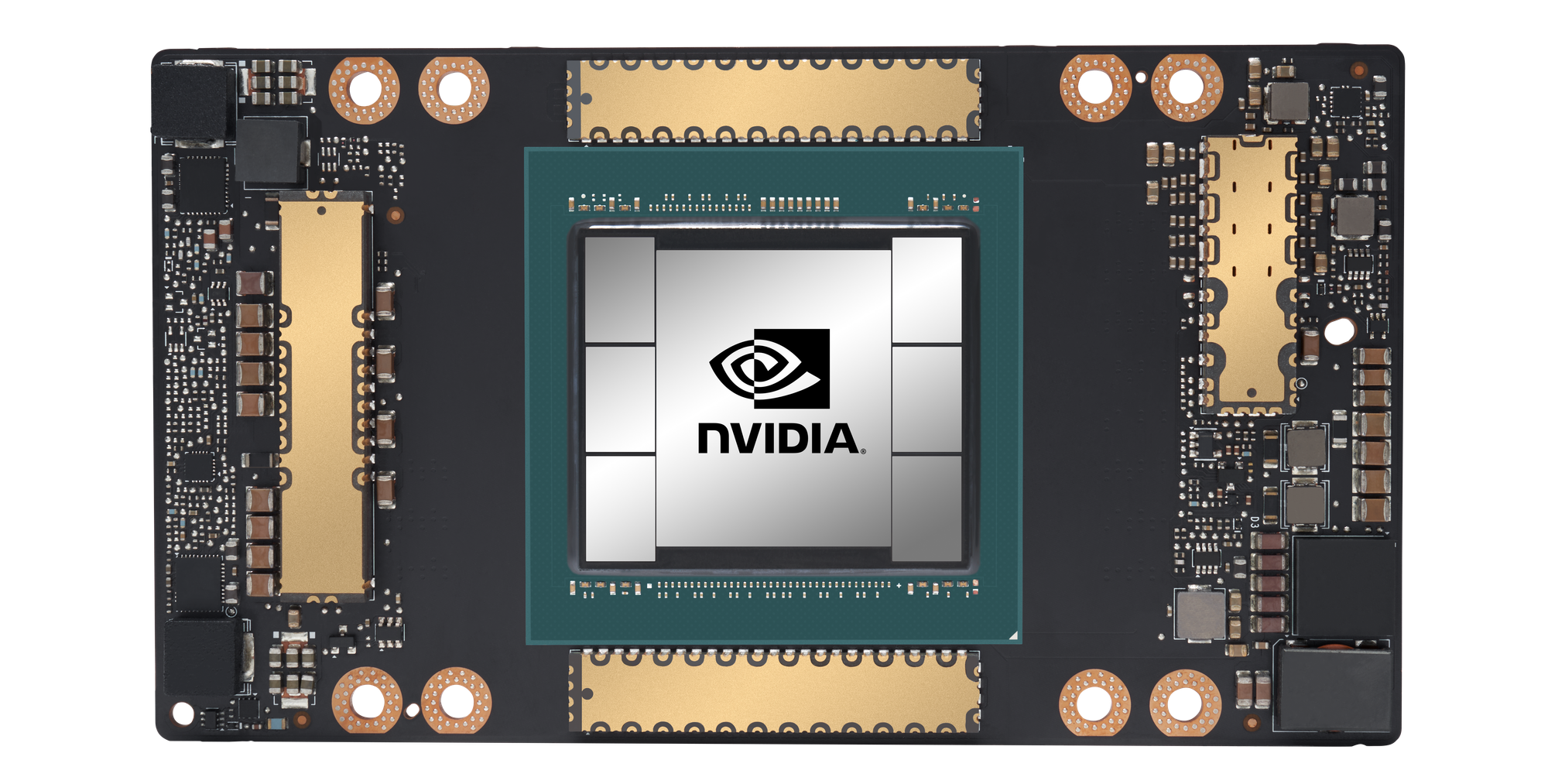

NVIDIA A100 Overview

The NVIDIA A100 GPU is a focused solution for AI, data analytics, and high-performance computing (HPC) in data centers. It excels in delivering efficient and scalable performance for specialized workloads. The NVIDIA A100 doesn't claim to be a jack-of-all-trades but rather specializes in tasks where deep learning, complex data analysis, and computational power are paramount. Its design and capabilities make it particularly effective for large-scale AI models and HPC applications, offering a significant performance boost in these specific areas.

Specific Use Cases for the NVIDIA A100

- AI Training and Inference: The NVIDIA A100 is engineered for complex AI workloads, excelling in both training and inference phases of large AI models. Its architecture is optimized for the rapid processing of AI tasks, making it ideal for deep learning applications.

- High-Performance Computing (HPC): For scientific research and simulations, the NVIDIA A100 provides robust computational power. It is well-suited for tasks in areas like climate modeling, genomic research, and physics simulations, where rapid data processing and analysis are crucial.

- Data Analytics: The NVIDIA A100’s ability to handle massive datasets efficiently makes it a strong contender for big data analytics. It can significantly reduce the time required for data processing, aiding in quicker decision-making and insight generation.

- Energy-Efficient Performance: Despite its high performance, the NVIDIA A100 is designed with energy efficiency in mind. This aspect is particularly important in large-scale data center operations where power consumption is a critical factor.

Performance

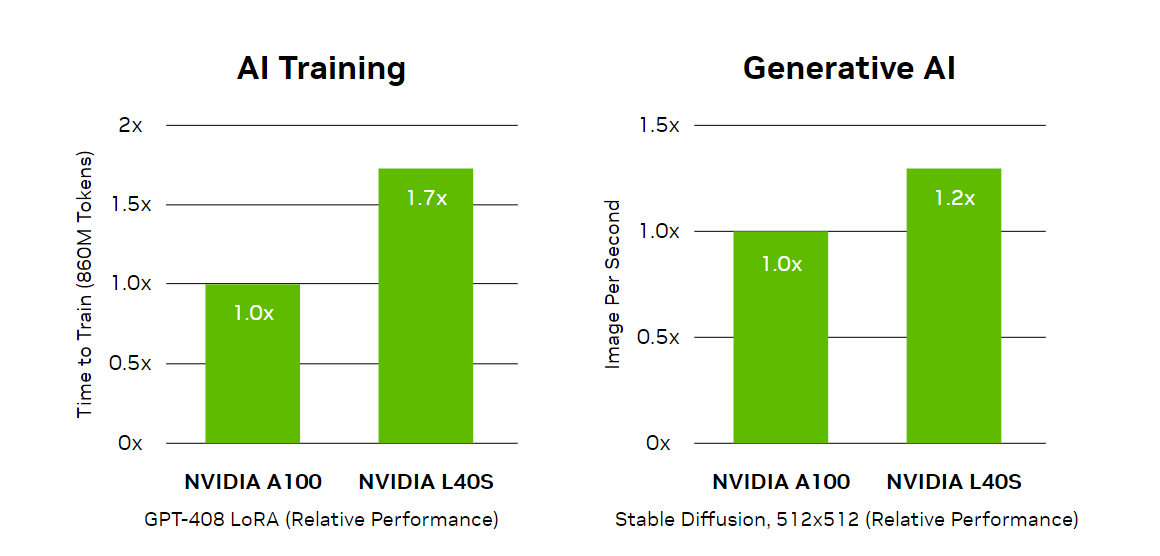

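The NVIDIA L40S GPU, with up to 1,466 TFLOPS of FP8 Tensor Core performance (with sparsity), excels in AI and graphics-intensive workloads, making it well suited to emerging applications in generative AI and advanced graphics. On the other hand, the NVIDIA A100's 19.5 TFLOPS of FP64 Tensor Core performance and 156 TFLOPS of TF32 Tensor Core performance (312 TFLOPS with sparsity) make it a formidable tool for AI training and high-performance computing tasks. Note that these headline figures are quoted at different precisions, so they are not directly comparable to one another.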

Tech Spec Analysis

| Specs | NVIDIA L40S | NVIDIA A100 |

|---|---|---|

| GPU Architecture | NVIDIA Ada Lovelace Architecture | NVIDIA Ampere Architecture |

| GPU Memory | 48GB GDDR6 with ECC | 80GB HBM2e |

| Memory Bandwidth | 864GB/s | Over 2TB/s (2048GB/s) |

| Tensor Core Performance (With Sparsity) | FP8 - 1,466 TFLOPS; FP16 - 733 TFLOPS | FP16 Tensor Core - 312 TFLOPS; TF32 Tensor Core - 312 TFLOPS |

| Single-Precision (FP32) Performance | 91.6 TFLOPS | 19.5 TFLOPS |

| Ray-Tracing (RT Core) Performance | 212 TFLOPS | Not applicable |

| Maximum Power Consumption | 350W | 400W (standard configuration) |

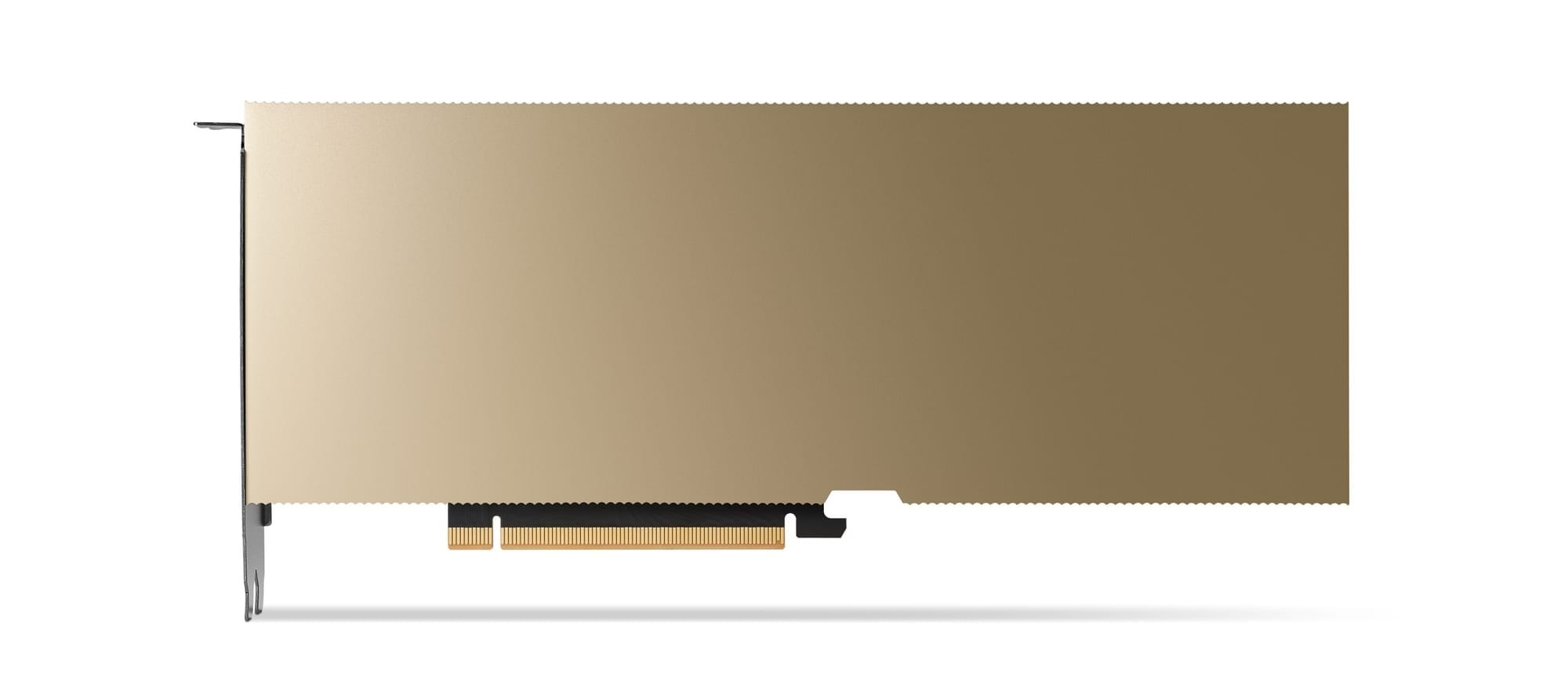

| Form Factor | Dual-slot | Dual-slot PCIe, SXM module for HGX platform |

| Interconnect | PCIe Gen4 x16 | NVIDIA® NVLink® Bridge for 2 GPUs: 600 GB/s; PCIe Gen4 |

| Key Features | Fourth-Gen Tensor Cores, Third-Gen RT Cores, Transformer Engine, Data Center Efficiency and Security, DLSS 3 support | Multi-Instance GPU (MIG) capability, Tensor Float (TF32) support, NVLink®, NVSwitch™, InfiniBand®, Magnum IO™ SDK compatibility |
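To make the table easier to reason about, the peak figures can be turned into a simple ratio. The snippet below is a minimal sketch, not a benchmark: it takes the FP32 and maximum power values from the table and computes peak FP32 throughput per watt. The dictionary layout and the function name `fp32_gflops_per_watt` are illustrative assumptions, and real workloads rarely reach peak numbers.

```python
# Peak values copied from the spec table above (A100 power is the 400W figure).
# The structure and names here are hypothetical, chosen only for illustration.
SPECS = {
    "NVIDIA L40S": {"fp32_tflops": 91.6, "max_power_w": 350, "memory_gb": 48},
    "NVIDIA A100": {"fp32_tflops": 19.5, "max_power_w": 400, "memory_gb": 80},
}


def fp32_gflops_per_watt(spec):
    """Return peak FP32 GFLOPS per watt of maximum board power."""
    return spec["fp32_tflops"] * 1000 / spec["max_power_w"]


if __name__ == "__main__":
    for name, spec in SPECS.items():
        ratio = fp32_gflops_per_watt(spec)
        print(f"{name}: {ratio:.1f} peak FP32 GFLOPS per watt")
```

This ratio favors the NVIDIA L40S because it only looks at standard FP32. It says nothing about the areas where the NVIDIA A100 is strongest in the table, such as memory capacity, memory bandwidth, and NVLink connectivity, so it should be read as one data point rather than a verdict.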

AMAX's Integration Expertise

At AMAX, we specialize in integrating these advanced NVIDIA GPUs into customized IT solutions. Our approach ensures optimal performance for specific applications, be it AI, HPC, or graphics-intensive workloads. Our expertise in advanced cooling technologies further enhances the performance and longevity of these powerful GPUs from data centers to workstations.

Fine-Tuning the Right GPU for Your Needs

Choosing between the NVIDIA L40S and NVIDIA A100 depends on your specific workload requirements. For enterprises looking to explore generative AI alongside advanced graphics, rendering, and video, the NVIDIA L40S is a future-ready choice. However, for organizations focused on large-scale AI training, data analytics, and HPC, where memory capacity, memory bandwidth, and FP64 performance matter most, the NVIDIA A100 remains a robust option.
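One rough first check when matching a workload to either GPU is whether the model weights fit in GPU memory at all. The sketch below is a back-of-the-envelope estimate under stated assumptions: it counts weights only (ignoring activations, KV cache, and optimizer state), uses decimal gigabytes, and reserves 20% of memory as headroom. The function names and the `headroom` parameter are hypothetical, not part of any NVIDIA tool.

```python
# GPU memory capacities from the spec table, in GB.
GPU_MEMORY_GB = {"NVIDIA L40S": 48, "NVIDIA A100": 80}

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_gb(params_billions, precision):
    """Approximate weight size in GB: 1e9 params * bytes, divided by 1e9 bytes per GB."""
    return params_billions * BYTES_PER_PARAM[precision]


def gpus_that_fit(params_billions, precision, headroom=0.8):
    """List GPUs whose memory, scaled by the headroom fraction, holds the weights.

    Assumption: a single GPU, weights only. Training typically needs
    several times more memory than this estimate.
    """
    needed = weights_gb(params_billions, precision)
    return [
        name
        for name, capacity in GPU_MEMORY_GB.items()
        if needed <= capacity * headroom
    ]


if __name__ == "__main__":
    for size in (13, 30, 70):
        print(f"{size}B params in fp16 fits on: {gpus_that_fit(size, 'fp16')}")
```

Under these assumptions, a model that passes this check on a single card still needs validation with real batch sizes and sequence lengths, and a model that fails it would need lower precision or multiple GPUs.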