Powering the Next Generation of AI

The NVIDIA DGX™ B200 is designed to meet the escalating compute demands of modern AI workloads. It combines high-performance computing, storage, and networking in a unified AI platform engineered to handle the training, fine-tuning, and inference phases of AI development, marking a significant milestone in generative AI.

Key Features at a Glance

- Eight NVIDIA Blackwell GPUs: Offering a groundbreaking 1.4 terabytes (TB) of GPU memory and 64 TB/s of memory bandwidth.

- Unmatched Performance: Achieves up to 72 petaFLOPS for training and 144 petaFLOPS for inference, showcasing superior AI computational power.

- Dual 5th Generation Intel® Xeon® Scalable Processors: Provide powerful processing capabilities for the most demanding AI tasks.

- Comprehensive NVIDIA Networking: Facilitates seamless data flow across the AI infrastructure.

- Foundation for NVIDIA DGX Ecosystem: Integral to the NVIDIA DGX BasePOD™ and DGX SuperPOD™ architectures, providing scalable solutions for AI development.
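The headline memory figures above are system-wide totals. As a rough illustration, the per-GPU share can be derived by dividing each total by the GPU count. This is a minimal sketch that assumes memory and bandwidth are split evenly across the eight GPUs; the constant and function names are illustrative, not part of any NVIDIA tool.

```python
# Figures quoted in the feature list above.
GPU_COUNT = 8
TOTAL_GPU_MEMORY_GB = 1440       # quoted as 1.4 TB (1,440 GB in the spec table)
TOTAL_MEMORY_BANDWIDTH_TBS = 64  # aggregate memory bandwidth in TB/s


def per_gpu(total, gpu_count=GPU_COUNT):
    """Split a system-wide total evenly across GPUs (an assumption)."""
    return total / gpu_count


memory_per_gpu_gb = per_gpu(TOTAL_GPU_MEMORY_GB)
bandwidth_per_gpu_tbs = per_gpu(TOTAL_MEMORY_BANDWIDTH_TBS)

print(f"{memory_per_gpu_gb:.0f} GB of memory per GPU")
print(f"{bandwidth_per_gpu_tbs:.0f} TB/s of memory bandwidth per GPU")
```

Under that even-split assumption, each GPU works out to 180 GB of memory and 8 TB/s of memory bandwidth.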

Unparalleled AI Performance

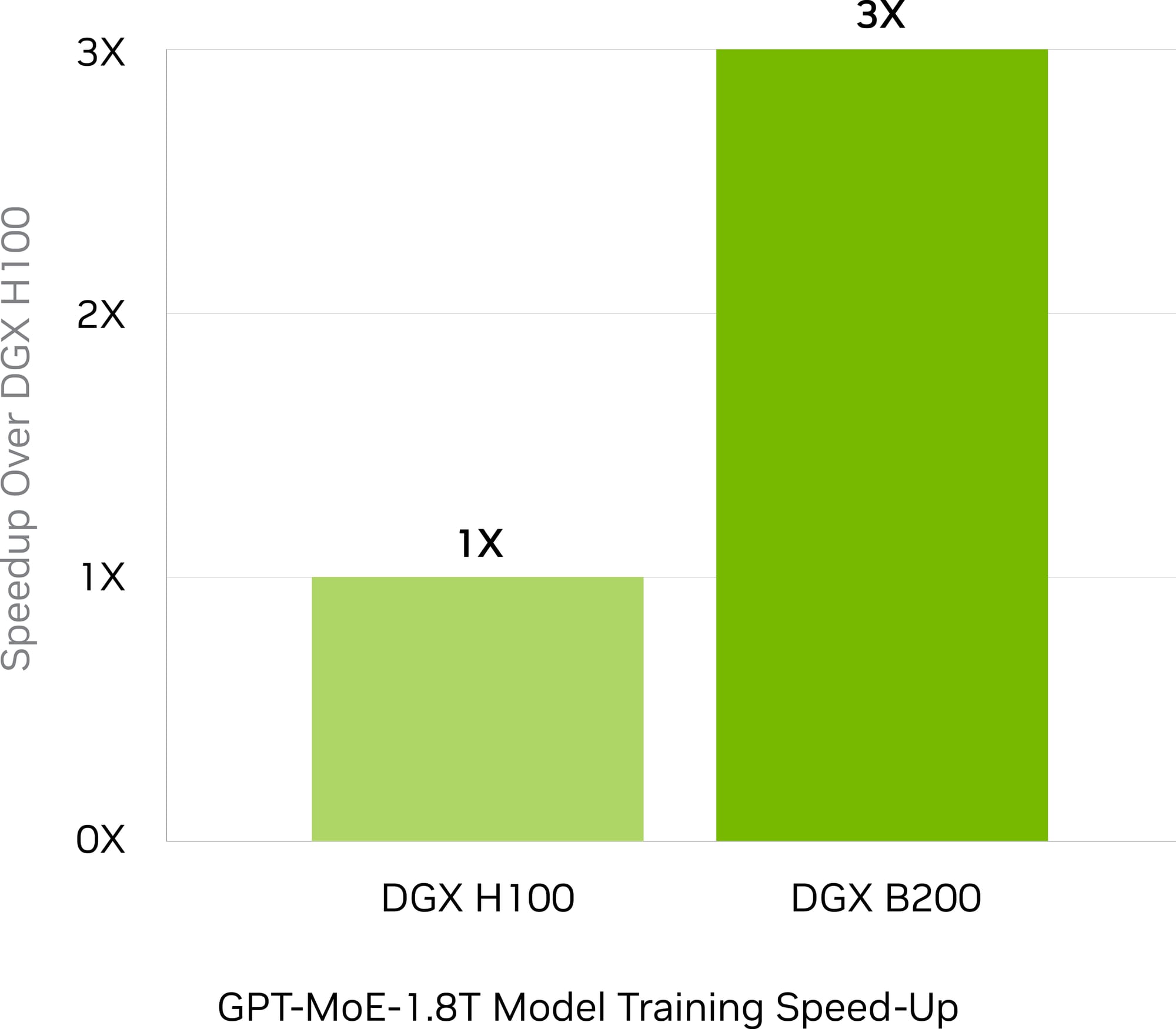

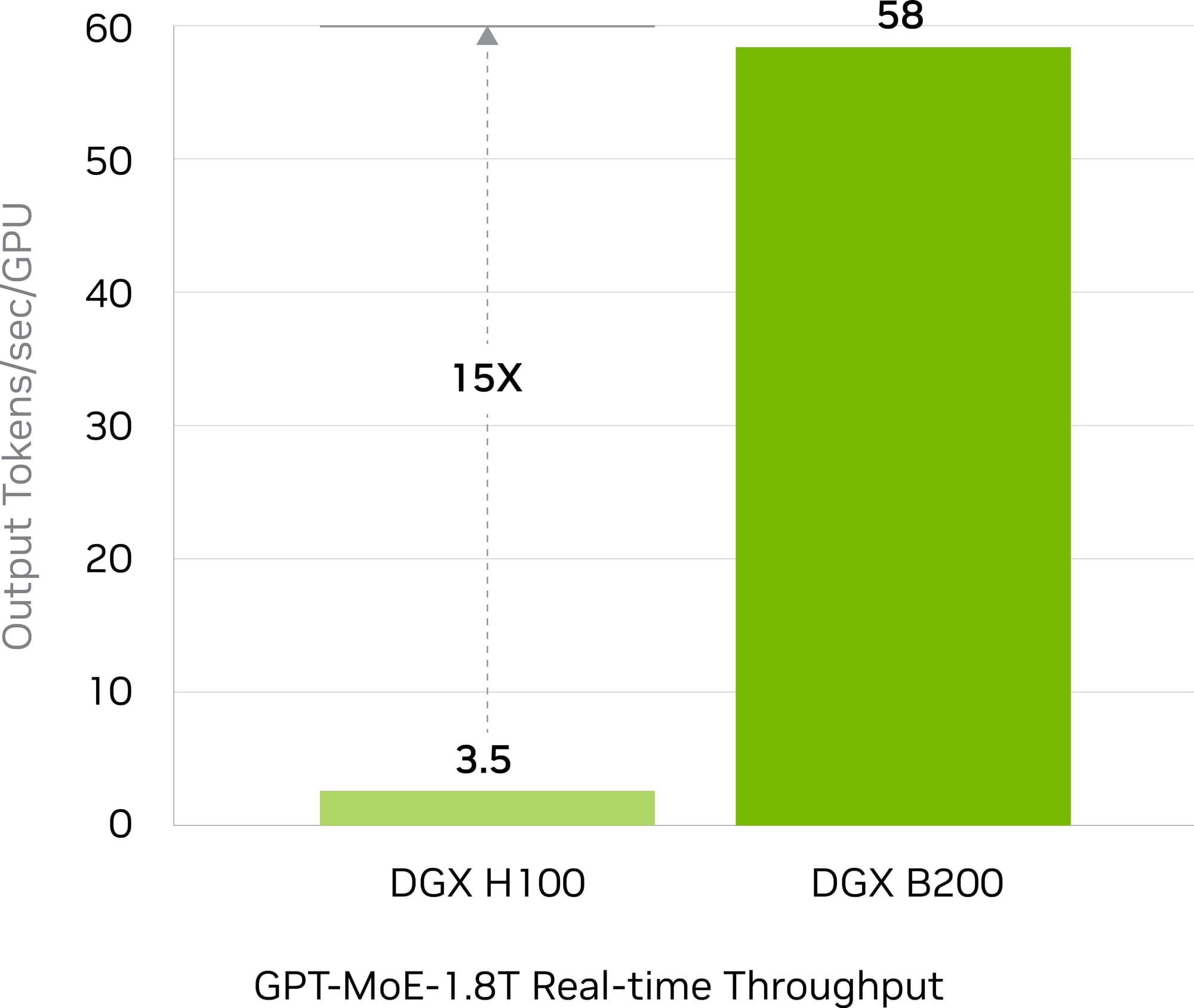

The DGX B200 represents NVIDIA's commitment to developing the world's most powerful supercomputers for AI. Powered by the NVIDIA Blackwell architecture, it delivers three times the training performance and fifteen times the inference performance compared to its predecessors.

This powerhouse of AI computing forms the core of NVIDIA's DGX POD™ reference architectures, offering scalable, high-speed solutions for turnkey AI infrastructures.

A Proven Infrastructure Standard

NVIDIA DGX B200 is the first system equipped with NVIDIA Blackwell GPUs. It tackles the most complex AI challenges, such as large language models and natural language processing, and it offers a fully optimized platform. This includes NVIDIA's comprehensive AI software stack and a robust ecosystem of third-party support, so businesses can apply AI to their most intricate problems.

DGX B200 Technical Specifications

| Specification | Details |
|---|---|
| GPU | 8x NVIDIA Blackwell GPUs |
| GPU Memory | 1,440GB total |
| Performance | 72 petaFLOPS training and 144 petaFLOPS inference |
| NVIDIA® NVSwitch™ | 2x |
| System Power Usage | ~14.3kW max |
| CPU | 2 Intel® Xeon® Platinum 8570 Processors (112 Cores total, 2.1 GHz Base, 4 GHz Max Boost) |
| System Memory | Up to 4TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI (up to 400Gb/s InfiniBand/Ethernet); 2x dual-port QSFP112 NVIDIA BlueField-3 DPU (up to 400Gb/s InfiniBand/Ethernet) |
| Management Network | 10Gb/s onboard NIC with RJ45; 100Gb/s dual-port Ethernet NIC; host baseboard management controller (BMC) with RJ45 |
| Storage | OS: 2x 1.9TB NVMe M.2; internal storage: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise – Optimized AI Software; NVIDIA Base Command – Orchestration, Scheduling, and Cluster Management; DGX OS / Ubuntu – Operating System |
| Rack Units (RU) | 10 RU |
| System Dimensions | Height: 17.5in (444mm); Width: 19.0in (482.2mm); Length: 35.3in (897.1mm) |
| Operating Temperature | 5–30°C (41–86°F) |
| Enterprise Support | Three-year Enterprise Business-Standard Support for hardware and software; 24/7 Enterprise Support portal access; live agent support during local business hours |
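Several rows in the table imply aggregate figures that are not stated directly. The sketch below derives a few of them with simple arithmetic on the listed values. It assumes raw (unformatted) drive capacity and peak line rate on every ConnectX-7 port, so real usable capacity and throughput will be lower; all variable names are illustrative.

```python
# Values copied from the specification table.
NVME_DRIVES = 8
NVME_DRIVE_TB = 3.84
CONNECTX7_PORTS = 8
PORT_SPEED_GBPS = 400
TOTAL_CPU_CORES = 112
CPU_SOCKETS = 2
TRAINING_PFLOPS = 72
INFERENCE_PFLOPS = 144

# Raw internal storage, before any RAID or filesystem overhead.
raw_storage_tb = NVME_DRIVES * NVME_DRIVE_TB

# Peak aggregate bandwidth of the eight ConnectX-7 ports, in Tb/s.
compute_network_tbps = CONNECTX7_PORTS * PORT_SPEED_GBPS / 1000

# Cores per CPU socket.
cores_per_cpu = TOTAL_CPU_CORES // CPU_SOCKETS

# Ratio of the quoted inference figure to the quoted training figure.
inference_to_training = INFERENCE_PFLOPS / TRAINING_PFLOPS

print(f"Raw internal storage: {raw_storage_tb:.2f} TB")
print(f"Peak ConnectX-7 bandwidth: {compute_network_tbps:.1f} Tb/s")
print(f"Cores per CPU: {cores_per_cpu}")
print(f"Inference/training petaFLOPS ratio: {inference_to_training:.1f}")
```

These derived numbers (30.72 TB raw storage, 3.2 Tb/s peak across the ConnectX-7 ports, 56 cores per CPU) are back-of-the-envelope values for capacity planning, not published specifications.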

Powered by NVIDIA Base Command

At the heart of the DGX platform is NVIDIA Base Command, which provides enterprise-grade orchestration, cluster management, and an AI-optimized operating system. Coupled with NVIDIA AI Enterprise, it streamlines AI development and deployment so that organizations get the most out of their DGX infrastructure.

The DGX Software Stack

The DGX Software Stack is designed to amplify the value of AI investments, offering a suite of tools that accelerate infrastructure performance end to end. It spans job scheduling with Kubernetes and Slurm through AI workflow management with NVIDIA TAO Toolkit and NVIDIA Triton™ Inference Server.