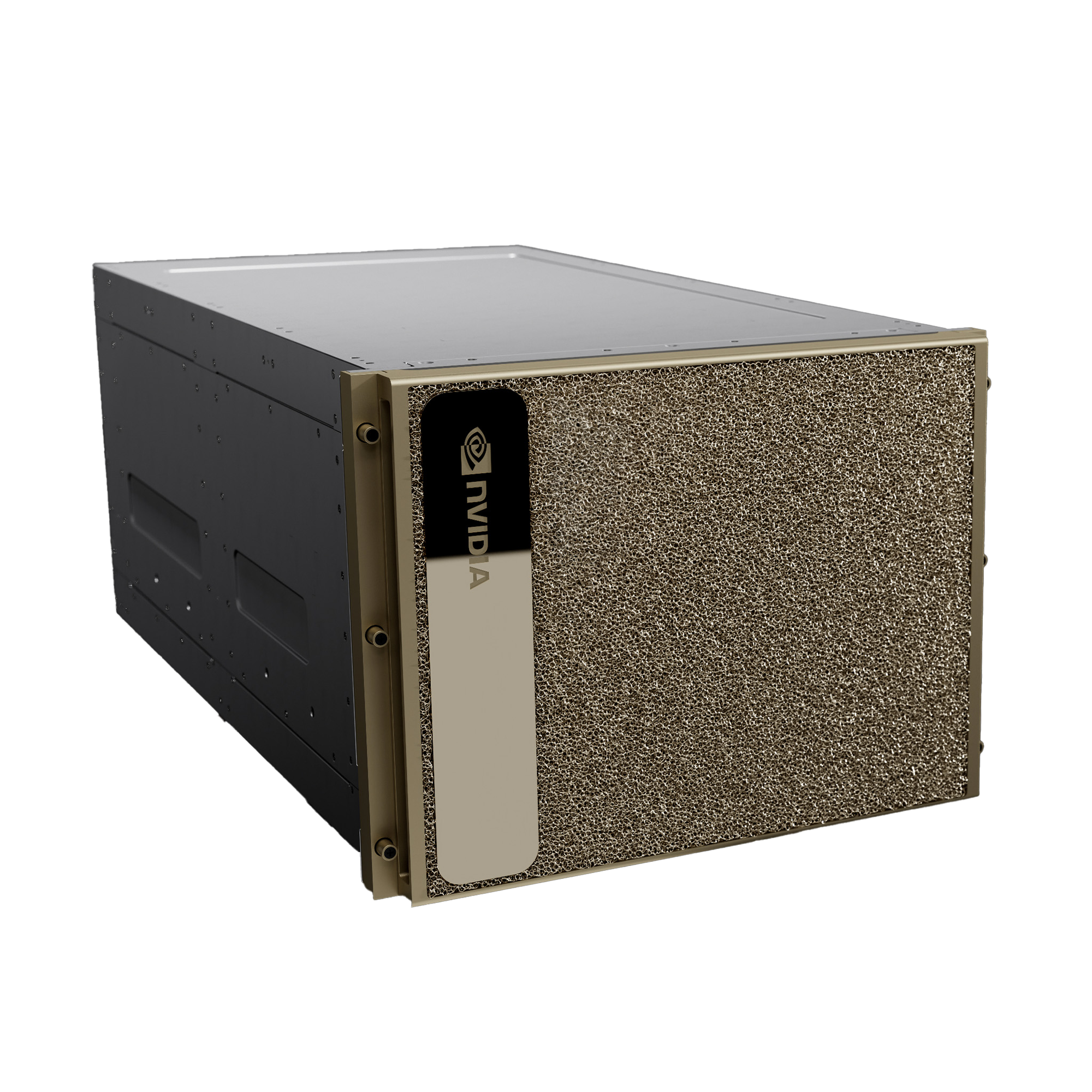

The Gold Standard for AI Infrastructure

NVIDIA DGX™ H200, part of the NVIDIA DGX™ platform, is built for enterprise AI workloads. Powered by eight NVIDIA H200 Tensor Core GPUs, the system is designed to maximize AI throughput for natural language processing, recommender systems, data analytics, and more. Available on-premises and through other access and deployment options, the DGX H200 gives enterprises the performance they need to tackle their most complex challenges with AI.

The Foundation of your AI Factory

AI has moved from experimental science to a business tool that companies large and small use every day to fuel innovation and optimize operations. Positioned as the centerpiece of an enterprise AI center of excellence, the DGX H200 is part of the world’s first purpose-built AI infrastructure portfolio. It offers a fully optimized hardware and software platform that includes NVIDIA Enterprise Support, an extensive third-party ecosystem, and access to guidance from NVIDIA specialists, so organizations can apply AI to their most complex business problems. DGX H200 offers proven reliability and is used by customers worldwide across nearly every industry.

Breaking the Barriers to AI at Scale

- High Performance: Delivers 32 petaFLOPS of FP8 AI performance from eight NVIDIA H200 Tensor Core GPUs, with twice the networking speed of the DGX A100.

- Advanced Networking: Features NVIDIA ConnectX®-7 SmartNICs with up to 400Gb/s connectivity for scaling across systems.

- Proven Scalability: Serves as a building block for NVIDIA DGX SuperPOD and DGX BasePOD deployments that run at high speed and large scale.

Specifications

| Specification | Details |
|---|---|
| GPU | 8x NVIDIA H200 Tensor Core GPUs, 141GB each |
| GPU Memory | 1,128GB total |
| Performance | 32 petaFLOPS FP8 |
| NVIDIA® NVSwitch™ | 4x |
| System Power Usage | 10.2kW standard, up to 14.3kW with Custom Thermal Solution |
| CPU | Dual Intel® Xeon® Platinum 8480C Processors (112 cores total, up to 3.80 GHz max boost) |
| System Memory | 2TB |
| Networking | Up to 400Gb/s options |
| Management Network | Extensive management and connectivity options |
| Storage | Comprehensive internal and OS storage solutions |
| Software | NVIDIA AI Enterprise, Base Command, multiple OS options |
| Support | Standard 3-year hardware and software support |
| System Weight | 287.6 lb (130.45 kg) |
| Packaged System Weight | 376 lb (170.45 kg) |
| System Dimensions | Height: 14.0 in, Width: 19.0 in, Length: 35.3 in |
| Operating Temperature Range | 5–30°C (41–86°F) |
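
For capacity planning, the headline figures above can be turned into a few derived numbers. The following is a minimal Python sketch, not an NVIDIA tool: the `DgxSpec` class and the `weights_fit` helper are hypothetical names introduced here for illustration. The fit check counts model weights only, assumes decimal gigabytes (1 GB = 10^9 bytes), and ignores activations, optimizer state, and KV cache, so treat it as a rough upper bound rather than a sizing guarantee.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxSpec:
    """Headline figures copied from the specifications table (hypothetical helper)."""
    gpu_count: int          # number of GPUs in the system
    gpu_memory_gb: int      # memory per GPU, in GB
    fp8_petaflops: float    # system-level FP8 performance

    @property
    def total_gpu_memory_gb(self) -> int:
        # Aggregate GPU memory across all GPUs in the system.
        return self.gpu_count * self.gpu_memory_gb

    @property
    def fp8_petaflops_per_gpu(self) -> float:
        # System FP8 figure divided evenly across the GPUs.
        return self.fp8_petaflops / self.gpu_count


def weights_fit(spec: DgxSpec, params_billion: float, bytes_per_param: int) -> bool:
    """Rough check: do the model weights alone fit in aggregate GPU memory?

    Assumption: 1 GB = 1e9 bytes, so N billion parameters at B bytes each
    occupy N * B gigabytes. Activations and caches are not counted.
    """
    weights_gb = params_billion * bytes_per_param
    return weights_gb <= spec.total_gpu_memory_gb


dgx_h200 = DgxSpec(gpu_count=8, gpu_memory_gb=141, fp8_petaflops=32.0)

print(dgx_h200.total_gpu_memory_gb)     # 1128, matching the "GPU Memory" row
print(dgx_h200.fp8_petaflops_per_gpu)   # 4.0
print(weights_fit(dgx_h200, params_billion=70, bytes_per_param=2))  # True (140 GB of weights)
```

The 70-billion-parameter, 2-bytes-per-parameter case is only an illustrative input; real deployments need headroom beyond the weights themselves.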

Empowered by NVIDIA Base Command

NVIDIA Base Command manages the DGX infrastructure and gives organizations access to NVIDIA’s software stack. It streamlines AI development and deployment with orchestration, cluster management, and a suite of tools that optimize compute, storage, and networking.

Leadership-Class Infrastructure by AMAX

AMAX offers the DGX H200 with several deployment options: on-premises installation, colocation in NVIDIA DGX-Ready Data Centers, and access through certified managed service providers. These options simplify integrating the system into existing IT infrastructure, so organizations can adopt high-end AI without overburdening their IT resources.