GPU Demands of Generative AI

AI has become a key component of modern business strategy. Whether you are training and fine-tuning your own custom models or using AI inference to transform business operations, AI is something every business must now account for.

To meet escalating AI compute requirements, many companies have become dependent on cloud service providers. According to a report from Colorlib, 94% of companies worldwide were expected to use some form of cloud computing in 2024. However, cloud services can become increasingly expensive because of the pay-as-you-go model, data transfer fees, and the frequent need to over-provision resources.

Generative AI, in particular, requires significant GPU resources to train large language models (LLMs) with billions or even trillions of parameters. Building and maintaining such infrastructure can also be highly complex, which highlights the importance of expert support and solutions.

Let's explore how a prominent generative AI software company, with support from AMAX, moved from cloud-based operations to a primarily on-premises infrastructure. This shift allowed the company to better manage resources, control costs, and enhance security, demonstrating the benefits of on-premises solutions in AI development.

Comparing Cloud Services and On-Premises Hardware

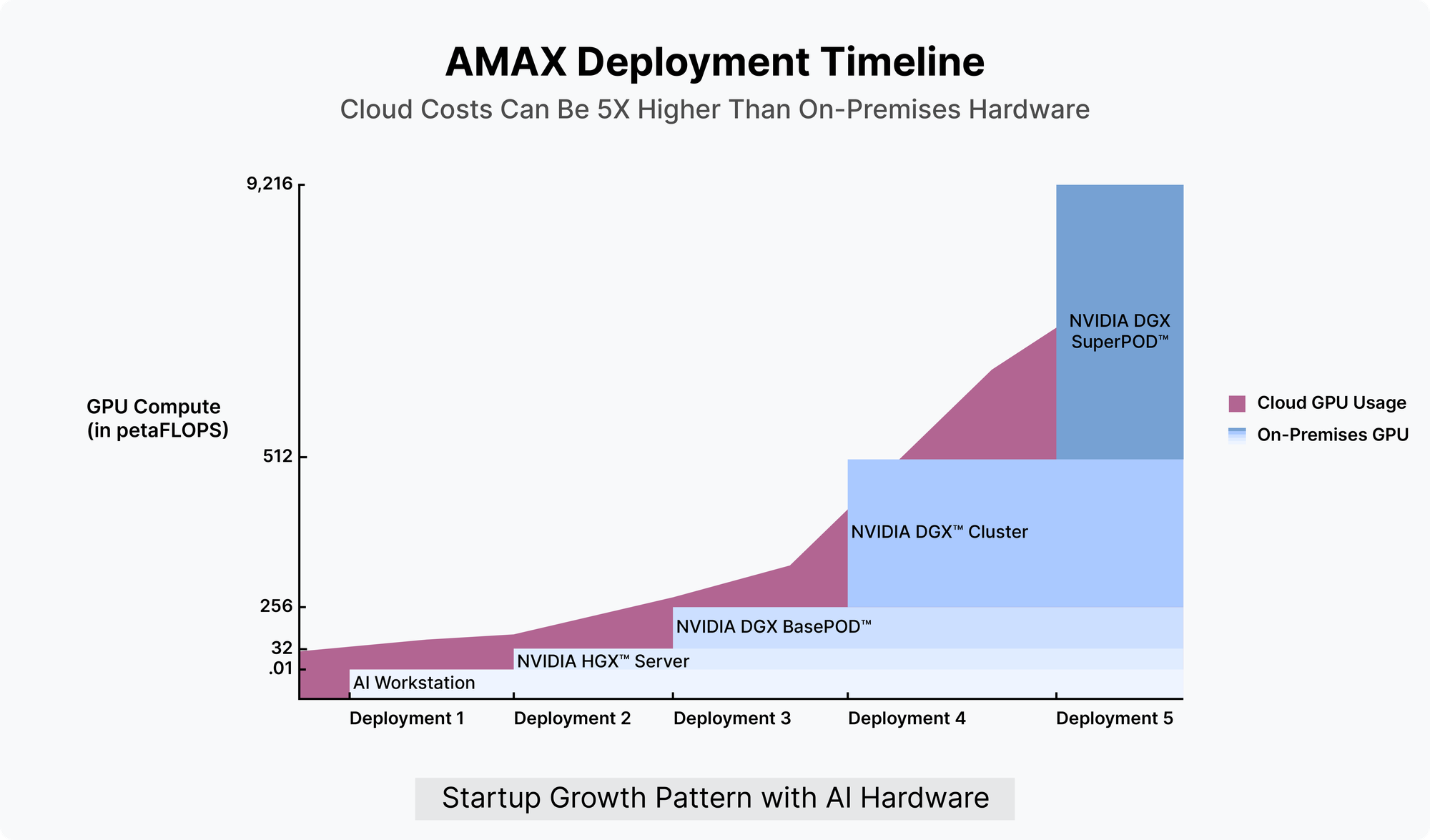

Today, for sustained GPU workloads in either training or inference, a cloud-only approach often does not make financial sense because of high cloud costs. The Total Cost of Ownership (TCO) for cloud services can be up to 5x higher than that of on-premises hardware, making on-premises solutions a much more cost-effective option for long-term savings.

Cloud Services

Cloud services are attractive for their flexibility and low initial costs, making them ideal for startups and projects at the prototype stage. Key benefits include:

- Elastic Capacity: Scale resources automatically to meet current requirements.

- Low Upfront Costs: The pay-as-you-go model minimizes initial capital outlays.

- Broad Accessibility: Deploy and access GPU resources easily from anywhere in the world.

However, this convenience comes at a price. Cloud costs can escalate sharply over time, especially for companies or organizations with consistent, high-volume AI workloads. Security and data privacy are also significant concerns when handling sensitive information such as personally identifiable information (PII) or protected health information regulated under HIPAA.

On-Premises Hardware

Alternatively, on-premises AI solutions offer significant long-term cost efficiency, unrestricted access, and enhanced security. Advantages include:

- Long-Term Savings: Reduced total cost of ownership over time.

- Optimized Efficiency: Systems engineered to maximize AI workload performance.

- Enhanced Data Control: Greater control over data and infrastructure.

For businesses with continuous, intensive AI demands, transitioning to on-premises hardware is realistically the only path forward. The constant need for high-volume GPU access often drives costs sharply upward when relying solely on cloud services. On-premises hardware requires a higher initial investment, but it significantly reduces long-term expenses by eliminating recurring cloud service fees. It also provides dedicated, consistent resources, ensuring uninterrupted access for AI training, fine-tuning, and other ML workloads.
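To see why the higher initial investment can pay off, a simple break-even estimate compares cumulative cloud spend against upfront hardware cost plus ongoing operating cost. This is a minimal sketch under stated assumptions: `CostModel`, `break_even_month`, `cumulative_costs`, and every dollar figure are hypothetical placeholders rather than AMAX or customer data, and the model ignores depreciation, financing, and changes in utilization.

```python
from dataclasses import dataclass
from typing import Optional, Tuple
import math


@dataclass
class CostModel:
    """Hypothetical monthly cost inputs for one workload (USD)."""
    cloud_monthly: float        # recurring cloud spend for the workload
    onprem_capex: float         # upfront hardware, installation, and bring-up cost
    onprem_opex_monthly: float  # power, cooling, hosting, and support per month


def break_even_month(m: CostModel) -> Optional[int]:
    """First whole month in which cumulative on-prem cost <= cumulative cloud cost.

    Solves capex + opex * t <= cloud * t, i.e. t >= capex / (cloud - opex).
    Returns None when on-prem operating cost alone matches or exceeds the
    cloud bill, because on-prem would then never catch up.
    """
    monthly_savings = m.cloud_monthly - m.onprem_opex_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(m.onprem_capex / monthly_savings)


def cumulative_costs(m: CostModel, months: int) -> Tuple[float, float]:
    """Return (cloud total, on-prem total) after the given number of months."""
    cloud_total = m.cloud_monthly * months
    onprem_total = m.onprem_capex + m.onprem_opex_monthly * months
    return cloud_total, onprem_total


if __name__ == "__main__":
    # Illustrative placeholder figures only.
    example = CostModel(cloud_monthly=200_000, onprem_capex=3_000_000, onprem_opex_monthly=40_000)
    print(break_even_month(example))  # → 19
```

With these placeholder inputs, $160,000 in monthly savings recovers the $3M outlay in month 19; after that, every additional month of sustained workload widens the gap in favor of on-premises hardware.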

Moving to the Data Center: Price, Performance, and Access

Deployment Timeline

The transition was executed in phases, aligning with the company’s growth trajectory:

- Deployment 1: 0.01 petaFLOPS

- Deployment 2: 32 petaFLOPS

- Deployment 3: 256 petaFLOPS

- Deployment 4: 512 petaFLOPS

- Future Deployment: 9,216 petaFLOPS

Realized Benefits

Transitioning from cloud to on-premises systems improves performance and cost management and provides exclusive access to hardware. Our customer began with minimal on-premises hardware and supplemented their GPU demands with cloud services while testing their applications. After increasing their investment in on-premises hardware, they briefly had a surplus of GPU capacity. However, as their operations expanded, this surplus was quickly absorbed, and within a few months their capacity was once again just meeting demand. Because cloud resources can cost up to 5x more than on-premises hardware, it is important to have infrastructure in place as quickly as possible.
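The hybrid pattern described above, where owned GPUs serve demand first and any shortfall bursts to the cloud, can be sketched as a simple monthly cost model. This is a minimal illustration, not the customer's actual figures: the per-GPU-hour rates, the 100,000 GPU-hour capacity, and the demand series are hypothetical, the helper names are invented for this example, and the 5x cloud premium simply reuses the upper-bound figure cited in this article.

```python
from typing import Tuple

ONPREM_RATE = 2.0             # hypothetical effective on-prem cost per GPU-hour (USD)
CLOUD_RATE = 5 * ONPREM_RATE  # hypothetical cloud cost per GPU-hour at a 5x premium


def split_demand(demand: float, capacity: float) -> Tuple[float, float, float]:
    """Split one month's GPU-hour demand into (on-prem used, cloud overflow, on-prem idle)."""
    used = min(demand, capacity)
    overflow = max(0.0, demand - capacity)
    idle = capacity - used
    return used, overflow, idle


def hybrid_cost(demand: float, capacity: float) -> float:
    """Monthly cost when on-prem GPUs run first and any overflow bursts to cloud.

    Owned hardware is charged for its full capacity, whether busy or idle.
    """
    _, overflow, _ = split_demand(demand, capacity)
    return capacity * ONPREM_RATE + overflow * CLOUD_RATE


def cloud_only_cost(demand: float) -> float:
    """Monthly cost if every GPU-hour is bought from the cloud."""
    return demand * CLOUD_RATE


if __name__ == "__main__":
    capacity = 100_000  # hypothetical on-prem GPU-hours available per month
    for month, demand in enumerate([60_000, 100_000, 140_000], start=1):
        used, overflow, idle = split_demand(demand, capacity)
        print(
            f"month {month}: on-prem {used:,.0f} h, cloud {overflow:,.0f} h, idle {idle:,.0f} h, "
            f"hybrid ${hybrid_cost(demand, capacity):,.0f} vs cloud-only ${cloud_only_cost(demand):,.0f}"
        )
```

In the third month of this toy series, 40,000 overflow GPU-hours cost twice as much as the entire on-premises fleet, which is why bringing new capacity online ahead of demand matters once growth is predictable.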

Planning Your AI Deployment with AMAX

As your company looks to scale its generative AI capabilities, consider the comprehensive technical expertise that AMAX offers. Our services encompass data center layout design, cluster architecture, network topology design, bring-up, performance tuning, and liquid- and air-cooled facility retrofit solutions. We also provide colocation services for site hosting. Partnering with AMAX ensures access to top-tier infrastructure solutions that are essential for advanced AI deployments.