Overview

The NVIDIA GB200 NVL72 platform delivers real-time training and inference performance for trillion-parameter AI models, but it requires liquid cooling, which many data centers are not yet equipped to provide. To address this gap, AMAX developed a solution that brings liquid-cooled infrastructure into environments originally designed for air-cooled systems. The LiquidMax® ALC-B4872 GB200 NVL72 AI POD features a liquid-to-air cooling system that enables full rack-scale deployment of GB200 servers without facility liquid loops. Organizations can adopt the latest generation of AI hardware using existing CRAC infrastructure, reducing complexity and shortening time to deployment.

The LiquidMax® ALC-B4872 GB200 NVL72 AI POD is pre-configured with 36 Grace Blackwell Superchips featuring 72 NVIDIA Blackwell GPUs and 36 Grace CPUs, interconnected by 5th-generation NVLink. This advanced solution is designed to deliver cutting-edge performance for workloads such as:

- Large Language Model (LLM) Inference

- Retrieval-Augmented Generation (RAG)

- High-speed data processing

With its scale-out, single-node NVIDIA MGX architecture, AMAX’s LiquidMax® ALC-B4872 GB200 NVL72 AI POD supports a wide variety of system designs and networking options that integrate into existing data center infrastructure.

In addition, the LiquidMax® ALC-B4872 GB200 NVL72 AI POD includes NVIDIA BlueField®-3 data processing units to enable cloud network acceleration, composable storage, zero-trust security and GPU compute elasticity in hyperscale AI clouds.

POD Configuration

Key Features

- Up to 30x Performance Increase for LLM inference workloads

- 25x Reduction in Cost and Energy Consumption compared to NVIDIA H100

- Scale-Out, Single-Node NVIDIA MGX Architecture for flexible deployment options

- Efficient Liquid-to-Air (L2A) Cooling for high-density AI and HPC workloads

Per Rack

| Component | Details |
|---|---|
| Compute Trays | 18 |
| Switch Trays | 9 |
| Cooling | Liquid-to-Air (L2A) |

Per Tray

| Component | Details |
|---|---|
| NVIDIA GPUs | 4 NVIDIA Blackwell GPUs |
| CPUs | 2 Grace CPUs |
| Memory | 960GB LPDDR5X + 288GB HBM3e |
| Networking | 2x NVIDIA ConnectX®-7 NIC (400GbE) + 2x BlueField®-3 DPU NICs (400GbE) |
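The per-tray counts can be cross-checked against the rack-level figures quoted elsewhere in this document (72 GPUs, 36 Grace CPUs, roughly 17 TB of LPDDR5X). The following is a minimal sketch, assuming all 18 compute trays are populated identically; the dictionary keys and the `rack_totals` helper are illustrative names, not part of any AMAX or NVIDIA tool.

```python
# Per-rack totals derived from the per-tray figures in the tables above.
# Assumption: all 18 compute trays carry the same configuration.
COMPUTE_TRAYS_PER_RACK = 18

PER_TRAY = {
    "blackwell_gpus": 4,
    "grace_cpus": 2,
    "lpddr5x_gb": 960,
    "connectx7_nics": 2,
    "bluefield3_dpus": 2,
}


def rack_totals(per_tray, trays=COMPUTE_TRAYS_PER_RACK):
    """Multiply each per-tray quantity by the number of compute trays."""
    return {name: count * trays for name, count in per_tray.items()}


totals = rack_totals(PER_TRAY)
for name, value in totals.items():
    print(f"{name}: {value}")
```

Under these assumptions the GPU and CPU totals come out to 72 and 36, and the LPDDR5X total is 17,280 GB, in line with the "Up to 17 TB" rack figure.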

Grace Blackwell Superchip Performance

| Workload | Compared Against |
|---|---|
| LLM Inference | NVIDIA H100 Tensor Core GPU |
| LLM Training | H100 |
| Energy Efficiency | H100 |
| Data Processing | CPU |

Blackwell GPU Performance

| Metric | Per Rack | Per Superchip |
|---|---|---|
| FP4 Tensor Core | 1,440 PFLOPS | 40 PFLOPS |
| FP8/FP6 Tensor Core | 720 PFLOPS | 20 PFLOPS |
| INT8 Tensor Core | 720 POPS | 20 POPS |
| FP16/BF16 Tensor Core | 360 PFLOPS | 10 PFLOPS |
| TF32 Tensor Core | 180 PFLOPS | 5 PFLOPS |
| FP32 | 6,480 TFLOPS | 180 TFLOPS |
| FP64 | 3,240 TFLOPS | 90 TFLOPS |
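Each rack figure in the table above is 36 times the figure in the second column, which matches the 36 Grace Blackwell Superchips in a rack. A short sketch of that consistency check follows; the values are copied from the table, while `GPU_TABLE` and `check_scaling` are illustrative names introduced here.

```python
SUPERCHIPS_PER_RACK = 36

# (per-rack, per-unit) values copied from the table above.
# Tensor Core rows are in PFLOPS (POPS for INT8); FP32 and FP64 are in TFLOPS.
GPU_TABLE = {
    "FP4 Tensor Core": (1440, 40),
    "FP8/FP6 Tensor Core": (720, 20),
    "INT8 Tensor Core": (720, 20),
    "FP16/BF16 Tensor Core": (360, 10),
    "TF32 Tensor Core": (180, 5),
    "FP32": (6480, 180),
    "FP64": (3240, 90),
}


def check_scaling(table, units=SUPERCHIPS_PER_RACK):
    """Return the rows whose rack figure is not units * per-unit figure."""
    return [row for row, (rack, unit) in table.items() if rack != unit * units]


mismatches = check_scaling(GPU_TABLE)
print(mismatches or "all rows scale by 36")
```

The check only confirms that the two columns are internally consistent; it says nothing about sustained throughput on a real workload.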

Grace CPU Specifications

| Metric | Per Rack | Per Superchip |
|---|---|---|
| CPU Core Count | 2,592 Arm® Neoverse V2 cores | 72 cores |
| CPU Memory | Up to 17 TB LPDDR5X | Up to 480 GB LPDDR5X |
| Memory Bandwidth | Up to 18.4 TB/s | Up to 512 GB/s |
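The rounded rack-level Grace CPU figures can be reproduced from the smaller-unit column by multiplying by 36. This sketch assumes one Grace CPU per unit and decimal units (1 TB = 1,000 GB); `grace_rack_totals` is a hypothetical helper used only for illustration.

```python
SUPERCHIPS_PER_RACK = 36  # assumption: one Grace CPU per superchip


def grace_rack_totals(cores=72, memory_gb=480, bandwidth_gb_per_s=512,
                      units=SUPERCHIPS_PER_RACK):
    """Scale single-CPU figures to a full rack, converting GB to TB."""
    return {
        "cores": cores * units,
        "memory_tb": memory_gb * units / 1000,
        "bandwidth_tb_per_s": bandwidth_gb_per_s * units / 1000,
    }


grace = grace_rack_totals()
print(f"cores: {grace['cores']}")
print(f"LPDDR5X: {grace['memory_tb']:.2f} TB")
print(f"memory bandwidth: {grace['bandwidth_tb_per_s']:.1f} TB/s")
```

The unrounded memory total is 17.28 TB, which the table reports as "Up to 17 TB".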

Per Rack Memory and Bandwidth

- Up to 13.5 TB HBM3e with bandwidth up to 576 TB/s

- NVLink Bandwidth: Up to 130 TB/s
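For capacity planning it can help to express the rack-level memory and bandwidth figures per GPU. The sketch below simply divides the "up to" values above by the 72 GPUs in a rack, assuming an even split and decimal units; the `RACK` dictionary and `per_gpu` function are illustrative names.

```python
GPUS_PER_RACK = 72

# "Up to" rack-level figures from the list above.
RACK = {
    "hbm3e_tb": 13.5,
    "hbm3e_bandwidth_tb_per_s": 576,
    "nvlink_bandwidth_tb_per_s": 130,
}


def per_gpu(rack, gpus=GPUS_PER_RACK):
    """Divide each rack-level figure evenly across the GPUs."""
    return {name: value / gpus for name, value in rack.items()}


share = per_gpu(RACK)
print(f"HBM3e per GPU: {share['hbm3e_tb'] * 1000:.1f} GB")
print(f"HBM3e bandwidth per GPU: {share['hbm3e_bandwidth_tb_per_s']:.1f} TB/s")
print(f"NVLink bandwidth per GPU: {share['nvlink_bandwidth_tb_per_s']:.1f} TB/s")
```

These are upper bounds derived from the published maximums, not measured values.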

Liquid-to-Air Cooling Solution

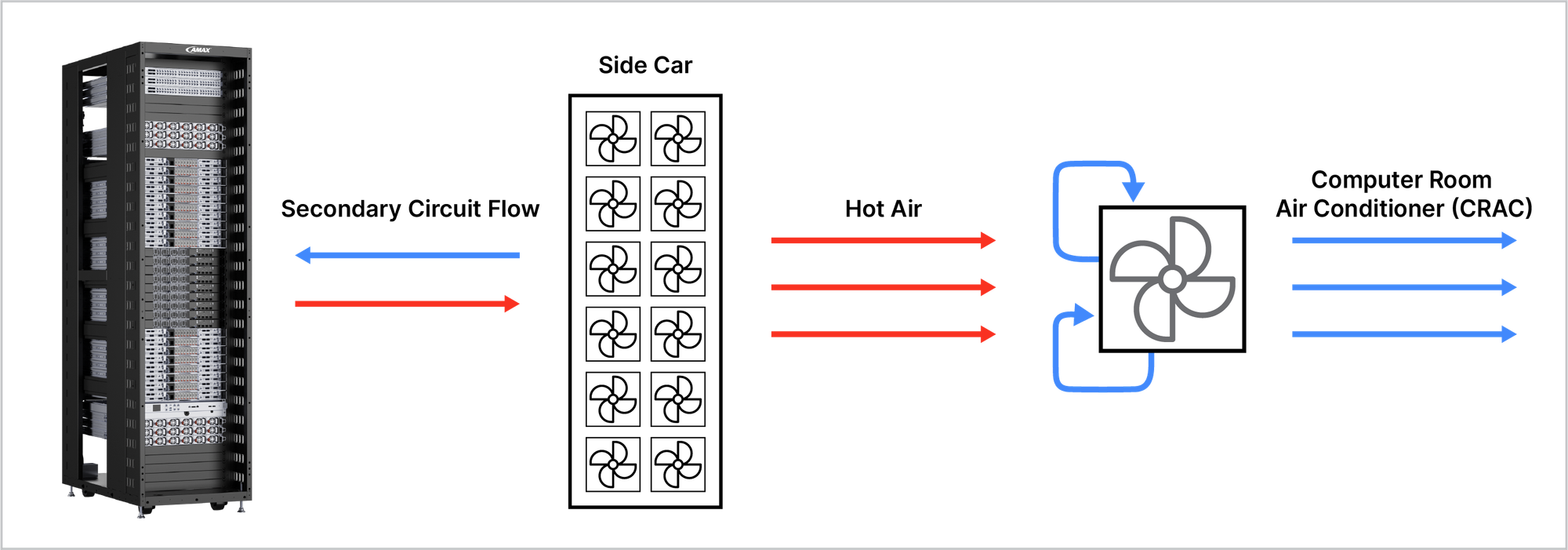

AMAX’s LiquidMax® ALC-B4872 GB200 NVL72 AI POD with Liquid-to-Air (L2A) Cooling provides an efficient thermal management solution for high-performance data centers.

The system circulates liquid coolant to absorb heat from high-performance components within the rack. A sidecar cooling unit then transfers this heat to the air and expels the hot air toward the Computer Room Air Conditioning (CRAC) unit for final dissipation. This design provides a cost-effective and scalable cooling solution for modern data centers.

Liquid-to-Air CDU

The LiquidMax® ALC-B4872 GB200 NVL72 AI POD uses an innovative Liquid-to-Air (L2A) cooling solution:

- Cooling Capacity: Up to 240 kW for 2-4 racks

- No need for facility liquid integration

- Scalable and cost-effective for modern data centers

| Specification | CDU Option 1 | CDU Option 2 |
|---|---|---|
| Power Consumption | 11.32 kVA | 11.32 kVA |
| Racks Managed | Up to 2 | Up to 4 |
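As a rough planning aid, the table can be turned into a count of CDUs and their combined power draw for a given number of racks. This is a sketch under stated assumptions: each CDU draws the listed 11.32 kVA regardless of load, the 240 kW cooling capacity limit is not modeled, and the six-rack deployment is a hypothetical example. `plan_cdus` is an illustrative helper, not an AMAX sizing tool.

```python
import math

CDU_POWER_KVA = 11.32  # listed power consumption for both options
CDU_OPTIONS = {"CDU Option 1": 2, "CDU Option 2": 4}  # max racks managed


def plan_cdus(num_racks, racks_per_cdu):
    """Return (CDU count, total CDU power in kVA) for a deployment."""
    if num_racks < 1 or racks_per_cdu < 1:
        raise ValueError("rack counts must be positive")
    count = math.ceil(num_racks / racks_per_cdu)
    return count, round(count * CDU_POWER_KVA, 2)


# Hypothetical six-rack deployment.
for name, racks_per_cdu in CDU_OPTIONS.items():
    count, kva = plan_cdus(6, racks_per_cdu)
    print(f"{name}: {count} CDUs, {kva} kVA")
```

Actual sizing also depends on per-rack heat load and room conditions, so the result should be treated as a starting point for discussion with the vendor.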

Why Choose AMAX?

AMAX combines innovative engineering with industry-leading hardware such as the NVIDIA GB200 NVL72 to deliver scalable solutions tailored to your unique needs. From design to deployment, AMAX provides expert support every step of the way.

- 10+ Years of Experience with HPC Liquid Cooling

- On-Site Installation & Cluster Bring-up

- Network Topology

- Bi-directional Logistics

- Testing & Validation

- Maintenance & Upgrades

- Troubleshooting & Repair Services