AI Accelerator Overview

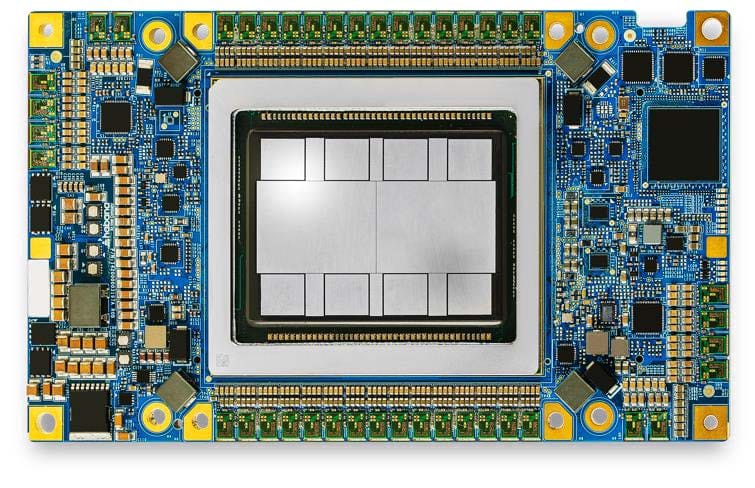

As AI expands across industries, demand for high-performance hardware keeps rising. While NVIDIA has set the pace with its H200 and B200 GPUs, AMD and Intel® are advancing their own accelerators to meet the needs of AI-driven businesses. Intel® enters this competitive field with the Gaudi® 3 AI accelerator, the successor to Gaudi® 2.

Designed for enterprise AI workloads, Gaudi® 3 focuses on higher compute throughput, larger memory capacity, and faster networking. These improvements position it as a competitive alternative to the established accelerators for businesses scaling their AI infrastructure, particularly as models and datasets grow more complex.

Key Innovations in Gaudi® 3

The Intel® Gaudi® 3 AI accelerator introduces a number of architectural upgrades over Gaudi® 2, optimized for high-performance AI workloads. Built using a 5nm process, Gaudi® 3 offers greater compute power and memory capacity, enhancing its ability to manage more complex models.

Compute and Memory Enhancements

Gaudi® 3 includes 64 Tensor Processor Cores (TPCs) and 8 Matrix Multiplication Engines (MMEs), which deliver about 1.8 PFLOPS (1,835 TFLOPS) of MME compute in both FP8 and BF16. Going by the comparison table below, that is roughly 4.2x the BF16 and 2.1x the FP8 MME throughput of Gaudi® 2. Additionally, with 128 GB of HBM2e memory and 3.7 TB/s of bandwidth, Gaudi® 3 delivers 1.5x the memory bandwidth and 33% more memory capacity than its predecessor, allowing larger AI models to be trained and deployed more efficiently.
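The memory claims above can be checked with a few lines of arithmetic. The sketch below uses only the HBM figures quoted in this article; the dictionary layout and the `generational_ratio` helper are illustrative names, not part of any Intel® tooling.

```python
# HBM figures quoted in this article for each accelerator generation.
GAUDI2 = {"hbm_capacity_gb": 96, "hbm_bandwidth_tbs": 2.46}
GAUDI3 = {"hbm_capacity_gb": 128, "hbm_bandwidth_tbs": 3.7}


def generational_ratio(key: str) -> float:
    """Return the Gaudi 3 / Gaudi 2 ratio for one spec (hypothetical helper)."""
    return GAUDI3[key] / GAUDI2[key]


bandwidth_ratio = generational_ratio("hbm_bandwidth_tbs")
capacity_ratio = generational_ratio("hbm_capacity_gb")

# Express bandwidth as a multiple and capacity as a percentage increase.
print(f"HBM bandwidth: {bandwidth_ratio:.2f}x")
print(f"HBM capacity: {(capacity_ratio - 1) * 100:.0f}% more")
```

Both results line up with the rounded "1.5x" and "33%" figures in the text.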

These upgrades make Gaudi® 3 particularly suited for large-scale AI applications, such as generative AI models and large language models (LLMs), where data and model sizes continue to grow.
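To give a rough sense of what 128 GB of HBM means for model size, the sketch below estimates the footprint of model weights alone at BF16 (2 bytes per parameter) and FP8 (1 byte per parameter). It is a deliberate simplification: it ignores activations, KV cache, and optimizer state, treats 1 GB as 10^9 bytes, and the model sizes in the loop are hypothetical examples rather than benchmarks of any specific model.

```python
HBM_CAPACITY_GB = 128  # per-accelerator HBM capacity quoted in this article

# Storage cost of one parameter in each number format.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weights_gb(params_billions: float, dtype: str) -> float:
    """Weight footprint in GB: (billions of params) x (bytes per param)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits_on_one_accelerator(params_billions: float, dtype: str) -> bool:
    """True if the weights alone fit in a single accelerator's HBM."""
    return weights_gb(params_billions, dtype) <= HBM_CAPACITY_GB


# Hypothetical model sizes, in billions of parameters.
for size in (8, 70, 180):
    for dtype in ("bf16", "fp8"):
        verdict = "fits" if fits_on_one_accelerator(size, dtype) else "does not fit"
        print(f"{size}B @ {dtype}: {weights_gb(size, dtype):.0f} GB, {verdict}")
```

Under these assumptions, a 70-billion-parameter model needs about 140 GB for BF16 weights, which exceeds one accelerator's HBM, while the same weights in FP8 need about 70 GB. Real deployments need additional headroom beyond the weights.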

Supporting AI Workloads with Gaudi® 3

Gaudi® 3 is designed to handle training and inference for a wide range of AI applications. From training models for natural language processing (NLP) to managing generative AI tasks, the improvements in Gaudi® 3 translate to more efficient processing and faster execution times.

Networking and Scalability

Gaudi® 3 provides enhanced networking capabilities, featuring 24 x 200 Gbps Ethernet ports per accelerator. This allows enterprises to scale AI operations over standard Ethernet rather than a specialized interconnect such as InfiniBand. The ability to scale from a single node to thousands of nodes makes Gaudi® 3 a suitable choice for enterprises looking to expand their AI infrastructure without significant hardware changes.
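The port count and the "1200 GB/s bidirectional" networking figure in the comparison table are two views of the same number. The short calculation below shows the conversion, assuming all 24 ports run at their 200 Gbps line rate and using 8 bits per byte; the variable names are illustrative.

```python
PORTS = 24             # Ethernet ports per Gaudi 3 accelerator
PORT_SPEED_GBPS = 200  # line rate per port, in gigabits per second
BITS_PER_BYTE = 8

# Aggregate line rate in one direction, in gigabits per second.
per_direction_gbps = PORTS * PORT_SPEED_GBPS

# Convert to gigabytes per second, then count both directions.
per_direction_gbytes = per_direction_gbps / BITS_PER_BYTE
bidirectional_gbytes = 2 * per_direction_gbytes

print(f"Per direction: {per_direction_gbps} Gbps = {per_direction_gbytes:.0f} GB/s")
print(f"Bidirectional: {bidirectional_gbytes:.0f} GB/s")
```

These are peak line-rate figures; achievable throughput depends on the protocol overhead and the network topology.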

Comparison of Gaudi® 2 and Gaudi® 3

Compared to Gaudi® 2, Gaudi® 3 brings notable enhancements in performance and efficiency. With its expanded memory, bandwidth, and compute power, Gaudi® 3 addresses the growing computational demands of modern AI workloads. These improvements make Gaudi® 3 a more capable solution for enterprises managing AI training and inference, particularly with larger models and datasets.

| Feature / Product | Intel® Gaudi® 2 Accelerator | Intel® Gaudi® 3 Accelerator |
|---|---|---|
| BF16 MME TFLOPs | 432 | 1835 |
| FP8 MME TFLOPs | 865 | 1835 |
| BF16 Vector TFLOPs | 11 | 28.7 |
| MME Units | 2 | 8 |
| TPC Units | 24 | 64 |
| HBM Capacity | 96 GB | 128 GB |
| HBM Bandwidth | 2.46 TB/s | 3.7 TB/s |
| On-die SRAM Capacity | 48 MB | 96 MB |
| On-die SRAM Bandwidth | 6.4 TB/s | 12.8 TB/s |
| Networking | 600 GB/s bidirectional | 1200 GB/s bidirectional |
| Host Interface | PCIe Gen4 x16 | PCIe Gen5 x16 |
| Host Interface Peak BW | 64 GB/s (32 GB/s per direction) | 128 GB/s (64 GB/s per direction) |
| Media | 8 Decoders | 14 Decoders |
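The compute rows of the table are easier to compare as ratios. The sketch below derives the generation-over-generation speedups from the peak figures listed above; the `COMPUTE_TFLOPS` dictionary and `speedups` helper are illustrative names, and peak TFLOPs do not by themselves predict real-world training or inference throughput.

```python
# (Gaudi 2, Gaudi 3) peak figures copied from the comparison table.
COMPUTE_TFLOPS = {
    "BF16 MME": (432, 1835),
    "FP8 MME": (865, 1835),
    "BF16 Vector": (11, 28.7),
}


def speedups(table: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return the Gaudi 3 / Gaudi 2 ratio for each row (hypothetical helper)."""
    return {name: gaudi3 / gaudi2 for name, (gaudi2, gaudi3) in table.items()}


ratios = speedups(COMPUTE_TFLOPS)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

The BF16 MME gain is about twice the FP8 gain because Gaudi® 3 lists the same peak MME rate for both formats, whereas Gaudi® 2 lists BF16 at roughly half its FP8 rate.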

AMAX’s Intel® Gaudi® 3 Solution

For organizations seeking an AI solution that leverages the performance of Intel® Gaudi® 3, AMAX offers the AceleMax® AXG-8281G. This 8U dual-processor server integrates 8 Intel® Gaudi® 3 HL-325L air-cooled accelerators, providing a powerful platform for AI training and inference at scale.

Key Specifications of AceleMax® AXG-8281G:

- Accelerators: 8 Intel® Gaudi® 3 accelerators, offering enhanced performance for demanding AI tasks.

- Memory: Supports up to 6 TB of system memory and features over 1 TB of HBM capacity (8 x 128 GB) per universal baseboard with 29.6 TB/s of aggregate HBM bandwidth, optimizing efficiency for LLMs and other large-scale AI workloads.

- Scalability: Includes 6 on-board OSFP 800GbE ports, designed for scale-out configurations, making it suitable for both small and large AI deployments.

- Workloads: Ideal for large-scale AI model training and inferencing, including generative AI applications.
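The node-level figures in the list above follow from the per-accelerator specifications. The sketch below multiplies them out, assuming all 8 accelerators contribute their full HBM capacity and bandwidth and that all 6 OSFP ports run at the 800 GbE line rate; the constant names are illustrative.

```python
ACCELERATORS_PER_NODE = 8
HBM_CAPACITY_GB = 128      # per accelerator
HBM_BANDWIDTH_TBS = 3.7    # per accelerator
OSFP_PORTS = 6
OSFP_PORT_SPEED_GBPS = 800

# Aggregate HBM across the 8-accelerator baseboard.
node_hbm_gb = ACCELERATORS_PER_NODE * HBM_CAPACITY_GB
node_hbm_bandwidth_tbs = ACCELERATORS_PER_NODE * HBM_BANDWIDTH_TBS

# Aggregate scale-out line rate across the on-board OSFP ports.
scale_out_gbps = OSFP_PORTS * OSFP_PORT_SPEED_GBPS

print(f"Node HBM capacity: {node_hbm_gb} GB")
print(f"Node HBM bandwidth: {node_hbm_bandwidth_tbs:.1f} TB/s")
print(f"Scale-out line rate: {scale_out_gbps} Gbps per direction")
```

The aggregate HBM is spread across 8 devices, so a model still has to be partitioned across accelerators to use all of it.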

The AceleMax® AXG-8281G is designed to meet the needs of enterprises looking to optimize their AI infrastructure with Intel® Gaudi® 3 technology. Its scalability and performance make it a strong candidate for organizations managing intensive AI workloads.

The Next Step for Intel® AI Accelerators

The Intel® Gaudi® 3 AI accelerator represents a step forward in AI acceleration, offering greater memory, bandwidth, and compute performance than Gaudi® 2. These improvements provide a solid foundation for enterprises working with large AI models, enabling more efficient training and inference.

With products like the AceleMax® AXG-8281G, powered by Intel® Gaudi® 3, AMAX offers a complete AI solution that supports both performance and scalability. This makes Gaudi® 3 a practical choice for enterprises ready to take the next step in AI infrastructure.